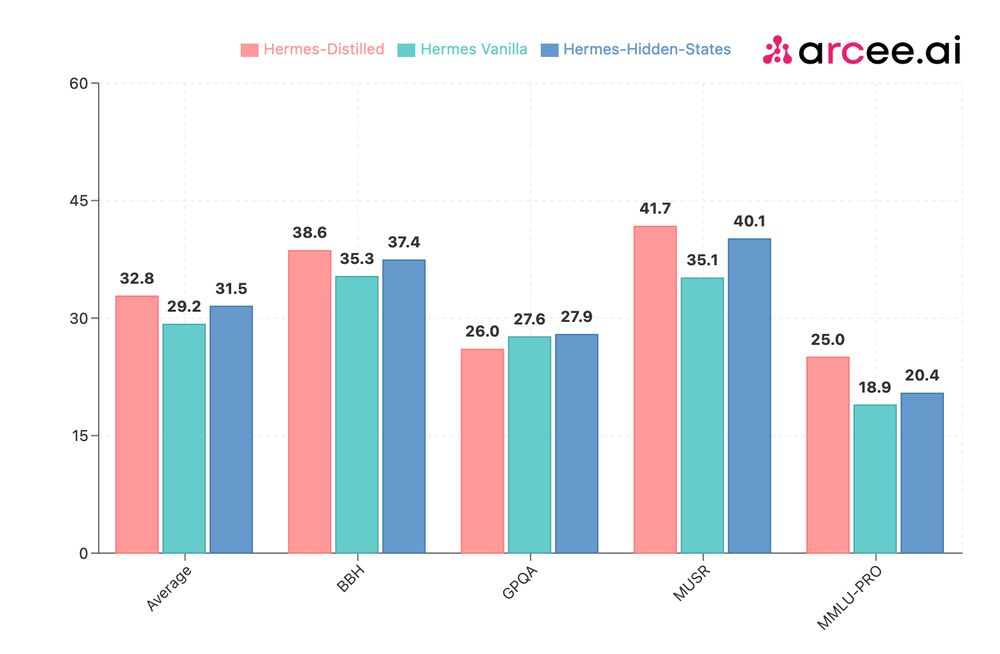

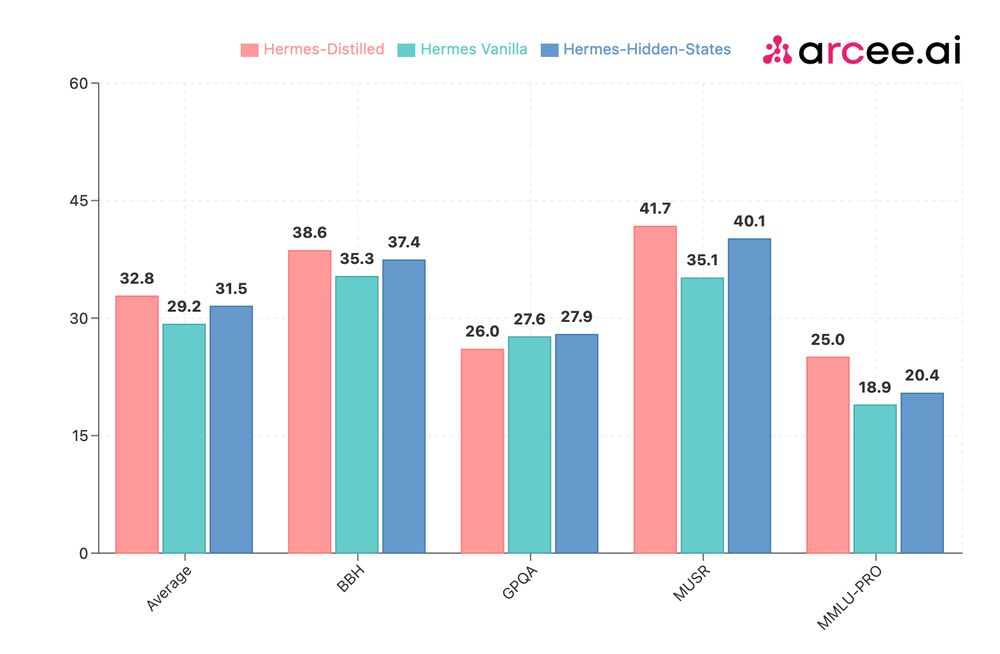

Distillation Experiments

Experiments comparing Distillation to Finetuning

September 1, 2024 · 2 min read

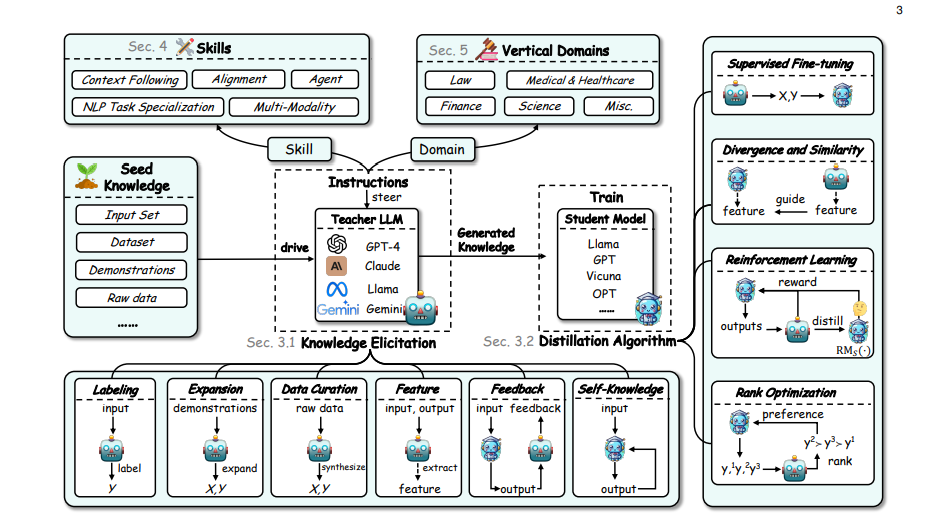

Distilling large language models into small language models!

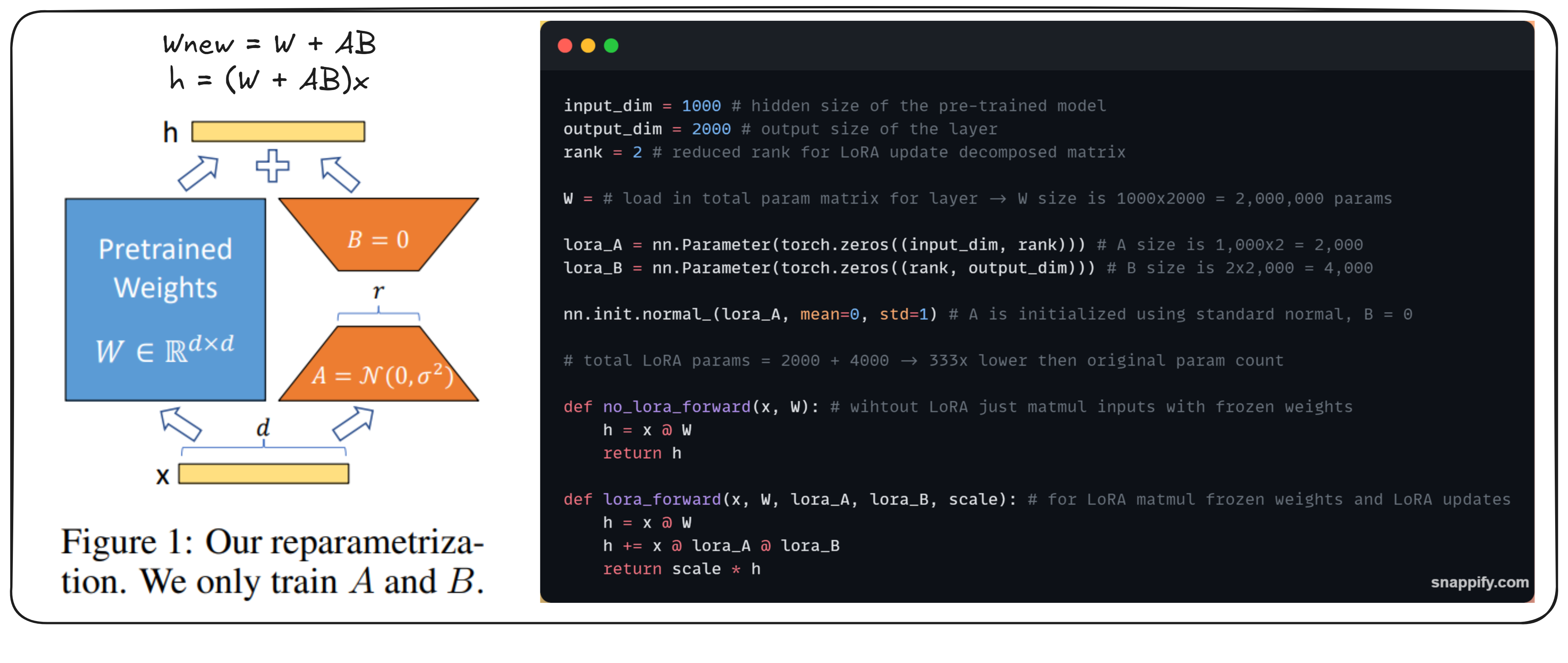

Tuning LLMs by learning only a fraction of the weight updates!

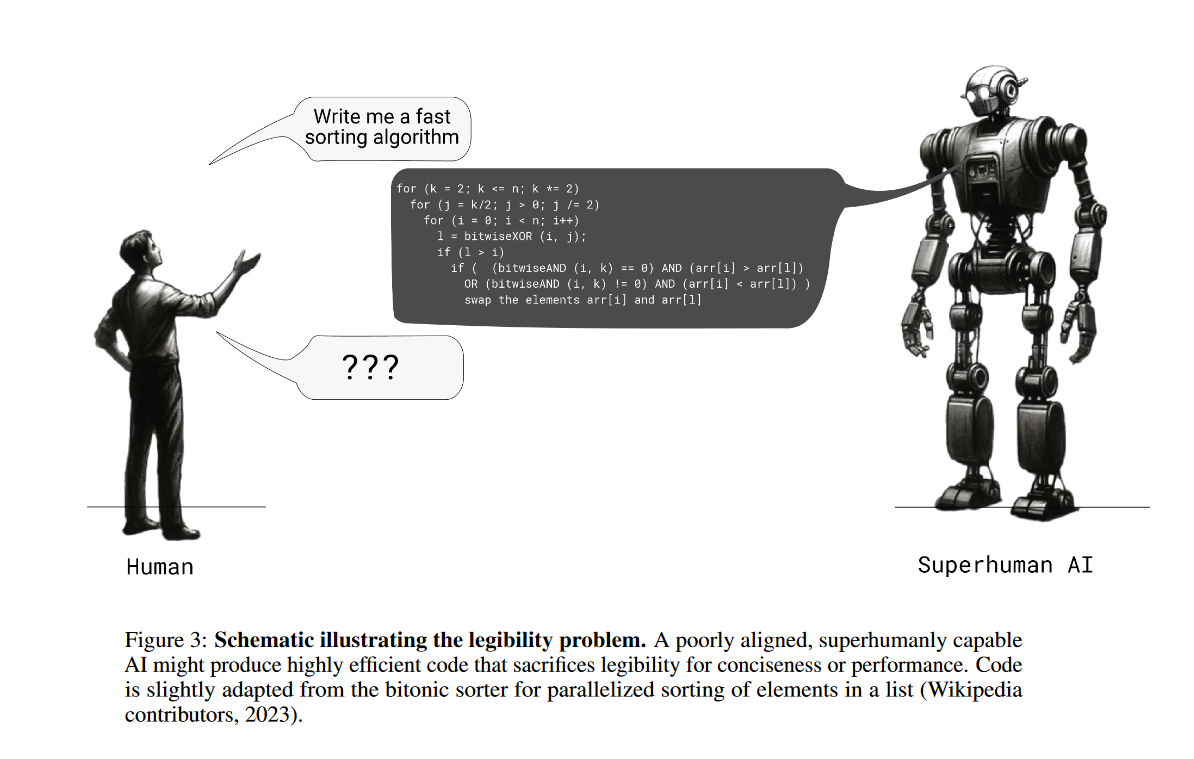

Optimizing to improve the legibility of an LLM's output!

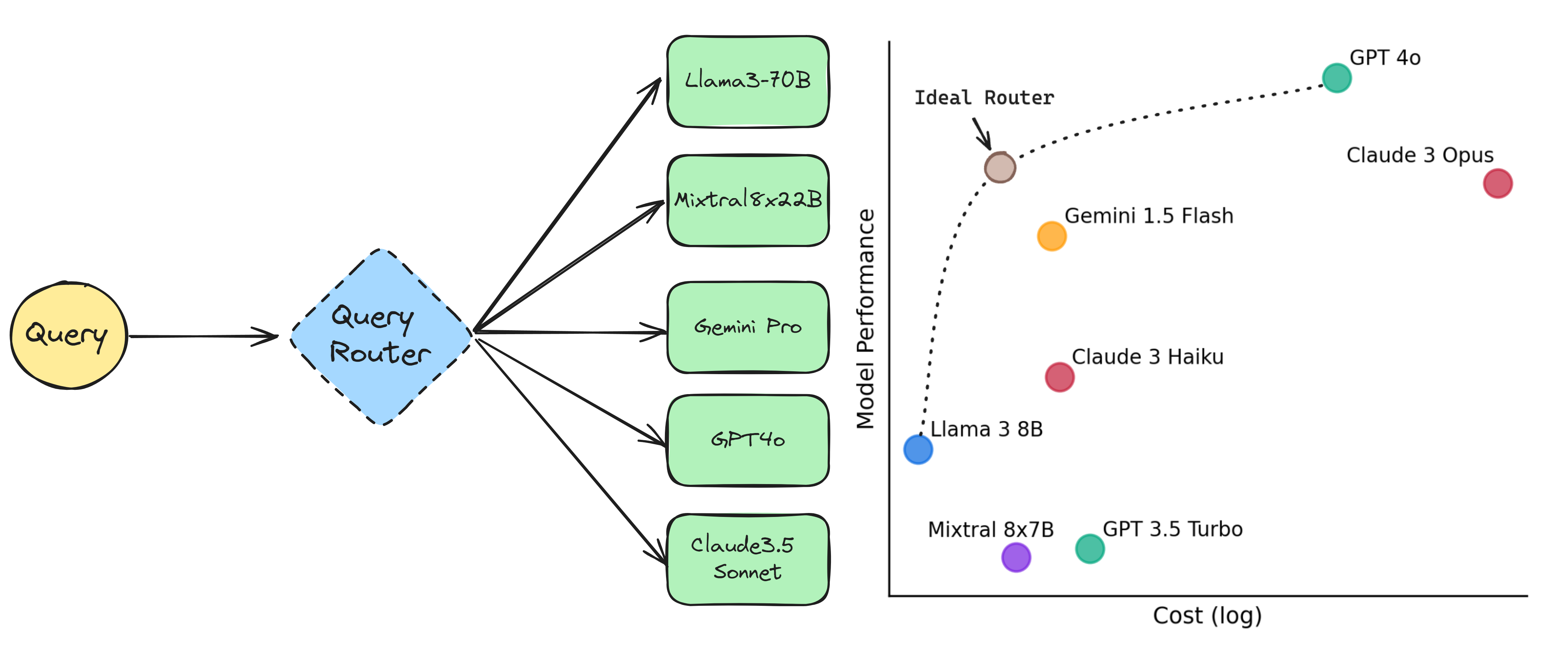

Route between LLMs to optimize cost and quality!

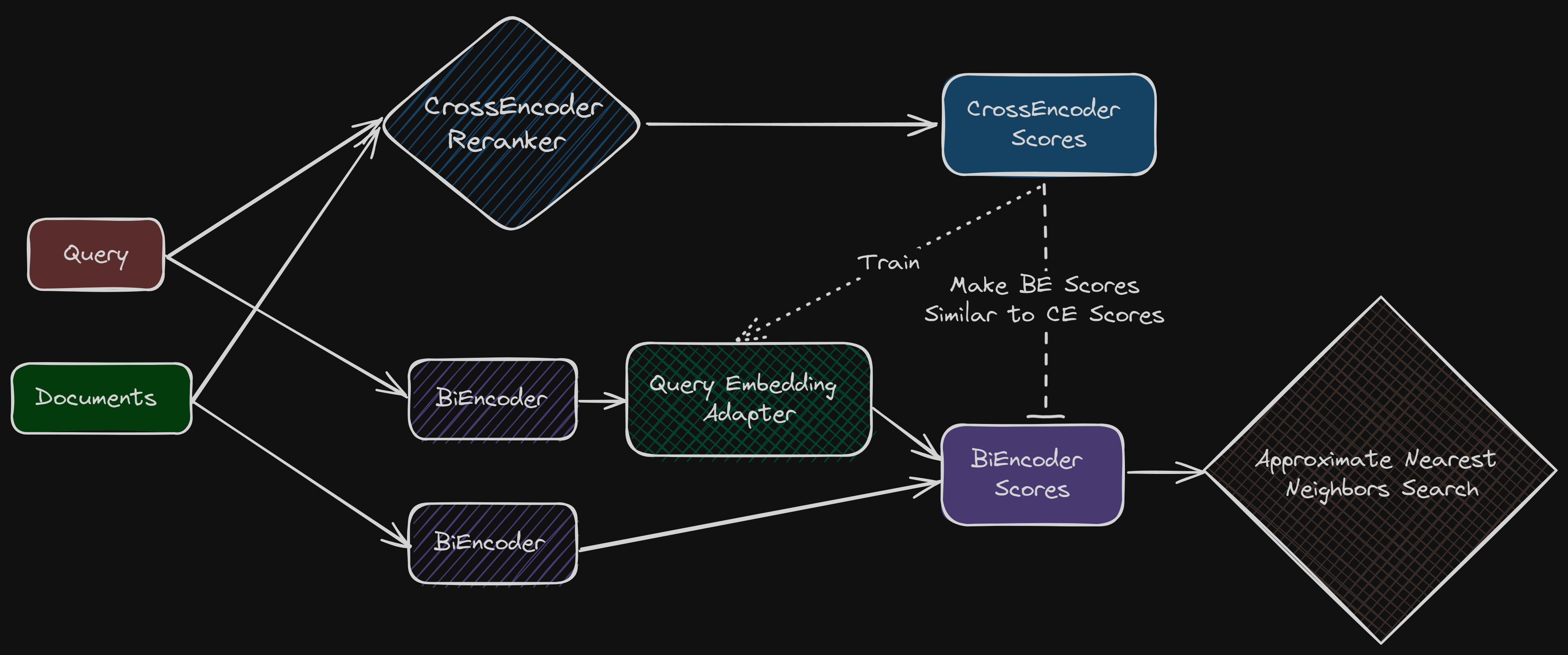

The quality of a reranked retriever and the speed of a bi-encoder retriever!