Recent advances in AI are breathing new life into podcasting! The Whisper speech-to-text model is a game-changer! It can transcribe podcasts with astonishing accuracy, allowing us to index them into Weaviate! I have been hosting the Weaviate podcast for a little over a year with 34 published episodes and am super excited to tell you how this will completely revamp our podcast, as well as the details behind how to build something like this for yourself.

Podcasts are easy to consume: we can listen to them as we drive to work, take a walk, or play a video game. However, despite their convenience, podcasts have lacked a crucial feature that other knowledge base mediums have: the ability to easily reference and search past content.

So let’s dive into how to build it, see some queries, and then come back to how this will change podcasting!

Whisper

OpenAI has taken a swing at unleashing the potential of AI technology, breaking open a piñata of new applications. Among the bounty of treats spilling out, podcasting shines bright like a glittering candy! - Written by ChatGPT

!pip install git+https://github.com/openai/whisper.git

import whisper

# available models = ["tiny.en", "tiny", "base.en", "base", "small.en", "small", "medium.en", "medium", "large-v1", "large-v2", "large"]

model = whisper.load_model("large-v2").to("cuda:0")

import time

start = time.time()

result = model.transcribe("20.mp3")

print(f"Transcribed in {time.time() - start} seconds.")

# write the full transcript to disk; the file is closed automatically
with open("20-text-dump.txt", "w") as f:
    f.write(result["text"])

From here you get a big text file from the podcast, and a few manual steps remain. The obvious first one is annotating who is speaking. Beyond that, although I am certainly in line to sing the praises of Whisper, some manual effort went into reading through the transcripts to correct mis-transcriptions like “Weave8”, “Bird Topic”, and “HTTP scan”. I think it would be fairly easy to fine-tune Whisper on these corrections, but the bulk of the work is behind me now. However, I imagine fine-tuning Whisper will be important for podcasts with hundreds of published episodes.
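Short of fine-tuning, recurring mis-transcriptions can be patched with a small lookup table. The snippet below is a minimal sketch of that idea, not the process used for the Weaviate podcast: the `CORRECTIONS` table and the `apply_corrections` helper are hypothetical names, and only the “Weave8” entry is taken from the text above.

```python
import re

# Hypothetical correction table. "Weave8" -> "Weaviate" comes from the post;
# extend it with the other mis-transcriptions you find in your own episodes.
CORRECTIONS = {
    "Weave8": "Weaviate",
}


def apply_corrections(text, corrections):
    """Replace every known mis-transcription with its intended spelling."""
    if not corrections:
        return text
    # Try longer phrases first so overlapping entries prefer the longer match.
    keys = sorted(corrections, key=len, reverse=True)
    pattern = re.compile("|".join(re.escape(key) for key in keys))
    return pattern.sub(lambda match: corrections[match.group(0)], text)


print(apply_corrections("Welcome to the Weave8 podcast.", CORRECTIONS))
```

A table like this also doubles as a starting list of examples if you later decide to fine-tune Whisper.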

Into Weaviate

If you are already familiar with Weaviate, you can simply clone the repository here and get going with:

docker compose up -d

python3 restore.py

This will restore a backup that contains the data that has been uploaded and indexed in Weaviate.

In case this is your first time using Weaviate, check out the Quickstart tutorial to get started!
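If you would rather build the data yourself than restore the backup, the speaker-annotated transcript first has to be split into clips. Here is a rough sketch of that step. The property names `content`, `speaker`, and `podNum` match the queries in this post, but the `Speaker: text` annotation format and the `transcript_to_clips` helper are assumptions for illustration, not necessarily how the repository does it.

```python
def transcript_to_clips(annotated_text, pod_num):
    """Turn 'Speaker: text' lines into dicts shaped like PodClip objects.

    Assumes each speaker turn starts with 'Speaker: '. Lines without a colon
    are treated as a continuation of the previous turn. A continuation line
    that itself contains a colon would be misread as a new turn, so this
    sketch needs a stricter format for real transcripts.
    """
    clips = []
    for raw_line in annotated_text.splitlines():
        line = raw_line.strip()
        if not line:
            continue
        speaker, separator, content = line.partition(":")
        if separator and content.strip():
            clips.append(
                {
                    "speaker": speaker.strip(),
                    "content": content.strip(),
                    "podNum": pod_num,
                }
            )
        elif clips:
            clips[-1]["content"] += " " + line
    return clips


sample = "Host: Welcome to the show!\n\nSam Bean: Thanks for having me.\nGreat to be here."
for clip in transcript_to_clips(sample, pod_num=20):
    print(clip)
```

Each dictionary can then be uploaded as one object, so that a search returns a single speaker turn rather than a whole episode.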

Queries

Now for the fun part, let’s answer some queries!!

| Query | Neural Search Pyramid |
|---|---|
| Top Result | Oh, yeah, that's an excellent question. And maybe I can also introduce, like I created this, you know, mental diagram for myself and I kept selling it everywhere and it seems like people get it and some of them even like to reach out on LinkedIn and say, hey, I got your vector search pyramid… Dmitry Kan, #32 |

| Query | Replication |
|---|---|
| Top Result | Yeah, absolutely. So when I first started at the company, we had a milestone. It was like the last milestone of the roadmap, which was replication. And it seemed so far away, such a monumental task at the time, right? We didn't really have anything to support that at the time that I joined. So over time, we've built towards replication. For example, starting with backups, first, we introduced the ability to backup whatever's in your Weaviate instance, maybe on a single node. And then that evolved into distributed backups, which allows you to backup a cluster. And all of this was working towards the ability to automatically replicate or to support replication… Parker Duckworth, #31 |

We can get even better results by filtering our searches with the speaker and podNum properties. For example, you can filter search queries about You.com and Weaviate from Sam Bean like this:

{

Get {

PodClip(

where: {

path: ["speaker"],

operator: Equal,

valueText: "Sam Bean"

}

nearText: {

concepts: ["How does You.com use Weaviate?"]

}

){

content

speaker

podNum

}

}

}

We can also filter on podcast numbers. Weaviate podcast #30 “The Future of Search” with Bob van Luijt, Chris Dossman, and Marco Bianco was the first podcast to come out after ChatGPT was released!

{

Get {

PodClip(

where: {

path: ["podNum"]

valueInt: 30

operator: GreaterThanEqual

}

nearText: {

concepts: ["ChatGPT and Weaviate"]

}

){

content

speaker

podNum

}

}

}
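The two filters can also be combined. Below is a minimal sketch that builds such a query as a string and posts it to Weaviate's GraphQL endpoint using only the standard library. The helper names (`build_where`, `build_query`, `run_query`) are made up for this example, and the URL assumes a local instance on port 8080, as you would get from the docker compose setup.

```python
import json
import urllib.request


def build_where(speaker=None, min_pod_num=None):
    """Build the `where` argument, joining both conditions with And if needed."""
    conditions = []
    if speaker is not None:
        # json.dumps yields a quoted, escaped string literal.
        conditions.append(
            '{ path: ["speaker"], operator: Equal, valueText: %s }' % json.dumps(speaker)
        )
    if min_pod_num is not None:
        conditions.append(
            '{ path: ["podNum"], operator: GreaterThanEqual, valueInt: %d }' % int(min_pod_num)
        )
    if not conditions:
        return ""
    if len(conditions) == 1:
        return "where: " + conditions[0]
    return "where: { operator: And, operands: [" + ", ".join(conditions) + "] }"


def build_query(concept, speaker=None, min_pod_num=None):
    """Return a Get query on PodClip with nearText and optional filters."""
    near_text = "nearText: { concepts: [%s] }" % json.dumps(concept)
    arguments = " ".join(part for part in (build_where(speaker, min_pod_num), near_text) if part)
    return "{ Get { PodClip(" + arguments + ") { content speaker podNum } } }"


def run_query(query, url="http://localhost:8080/v1/graphql"):
    """POST the query to Weaviate (assumed URL) and return the parsed JSON."""
    request = urllib.request.Request(
        url,
        data=json.dumps({"query": query}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


print(build_query("ChatGPT and Weaviate", speaker="Sam Bean", min_pod_num=30))
```

With a running instance, `run_query(build_query(...))` should return the matching clips under `data.Get.PodClip`.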

Combining the where filter with hybrid search could improve the search results further. This feature will be added in the Weaviate 1.18 release.

The Future of Podcasting UX

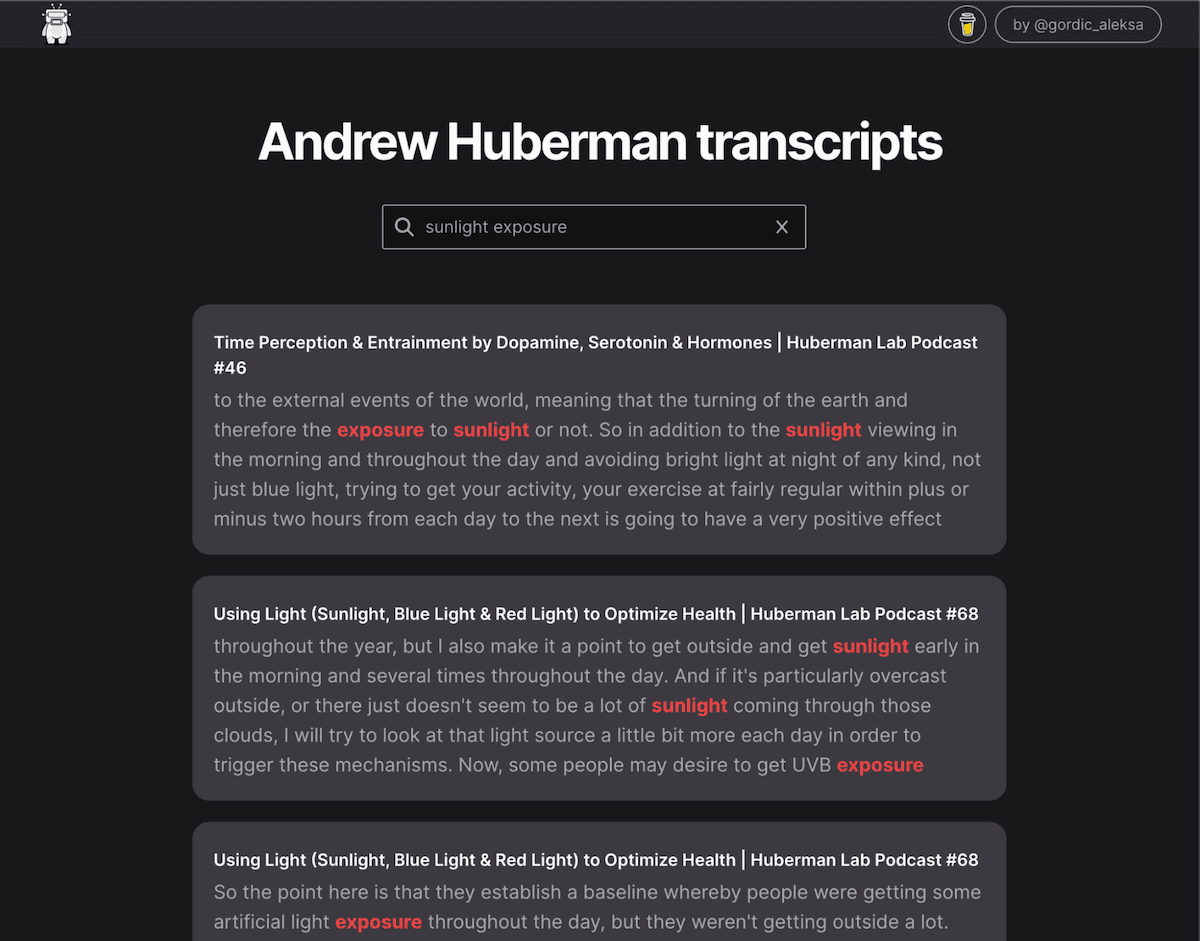

First off, Aleksa Gordić beat me to the punch with his amazing hubermantranscripts.com! He even used Weaviate 🤣! I remember seeing something Dr. Huberman had recommended about sunlight exposure. I recently moved from Florida to Boston and am a little bit worried about the shorter daylight. I also usually roll out of bed, stop at the coffee machine, and head to the computer (long live remote work!). I asked Aleksa’s app about sunlight exposure and, boom, I was able to instantly find this knowledge! Maybe it is time to switch up my morning routine!

In terms of the user experience for a single podcast, I think this is an incremental improvement over the “chapter” annotations that allow you to skip ahead on YouTube videos. That isn’t to understate the improvement though; it is quite tedious to write detailed descriptions for each chapter. Plus, long descriptions don't look so nice in YouTube’s Chapter interface.

I think the real innovation in the podcasting user experience is in a collection of podcasts. A collection of podcasts now achieves knowledge base reference functionality, similar to code documentation or survey papers!

Further, I think this will transform podcast recommendation. One way of doing this could be to use each chunk of a podcast to search across all the atomic chunks of other podcasts. Alternatively, we could construct a single embedding for the entire podcast episode.

This is one of my favorite applications of our new ref2vec module. The first solution to ref2vec describes constructing Podcast – (hasSegment) → Segment cross-references, and then averaging the vectors of the segments to form the Podcast vector. We are exploring additional aggregation ideas as well such as:

Ref2vec-centroids: Cluster the segment vectors and return multiple vectors to represent the Podcast. This also requires solving how we want to add multi-vector object representations to Weaviate.

Graph neural network aggregation: Graph neural networks are a perfect functional class for aggregating a variable number of edges. For example, one podcast may have 20 segments, and another one 35!

LLM Summarize: Parse through the referenced content and ask the LLM to write a summary of it, with the idea being that we then embed this summary.
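To make the averaging idea concrete, here is a minimal sketch of the first approach: average the segment vectors into one Podcast vector, then compare two podcasts with cosine similarity. It is plain Python for illustration, not the ref2vec module itself, and the toy 3-dimensional vectors stand in for real segment embeddings.

```python
import math


def mean_vector(vectors):
    """Average a variable number of equal-length vectors into one vector."""
    if not vectors:
        raise ValueError("need at least one segment vector")
    dim = len(vectors[0])
    if any(len(vector) != dim for vector in vectors):
        raise ValueError("all segment vectors must have the same length")
    return [sum(vector[i] for vector in vectors) / len(vectors) for i in range(dim)]


def cosine_similarity(a, b):
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Toy segment vectors; the two podcasts have different numbers of segments.
podcast_a = mean_vector([[0.9, 0.1, 0.0], [0.7, 0.3, 0.0]])
podcast_b = mean_vector([[0.8, 0.2, 0.1], [0.6, 0.2, 0.0], [0.7, 0.1, 0.1]])
print(cosine_similarity(podcast_a, podcast_b))
```

Averaging handles any number of segments, but it also blurs distinct topics together, which is part of the motivation for the alternatives listed above.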

Stay tuned for more updates on ref2vec!

Dog-fooding

“Dog-fooding” describes learning about your product by using it, as in eating your own dog food. There are 3 main features in development at Weaviate that I am super excited to work on, with the Weaviate Podcast serving as the dataset.

Weaviate’s generate module

Weaviate’s new generate module combines the power of Large Language Models, such as ChatGPT, with Weaviate! I don’t want to give away too much of this yet, but please stay tuned for Weaviate Air #5, for the celebration of this new Weaviate module and some trick shots using the Weaviate Podcast as the dataset!

Custom Models

Since joining Weaviate I have been fascinated with the conversation around zero-shot versus fine-tuned embedding models. Particularly from my conversation with Nils Reimers, I have become very interested in the continual learning nature of this. For example, when we released the ref2vec module and discussed it on the podcast, the all-miniLM-L6-v2 model has never seen ref2vec before in its training set. Additionally, a model fine-tuned up to podcast #30 will have never seen ref2vec either!

I am also very interested in the fine-tuning of cross-encoder models, which you can learn more about here.

Custom Benchmarking

I have also been working on the BEIR benchmarking in Weaviate (nearly finished!). This has inspired me to think about benchmarking search performance and how this is relevant to Weaviate users. As a quick introduction, benchmarking search means constructing a set of “gold” documents per query that we want the system to return. We then use metrics like hits at k, recall, nDCG, and precision to report how well the system was able to do this.
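For a single query, these metrics can be sketched in a few lines. The version below assumes binary relevance (a document is either in the gold set or not), which is a simplification: BEIR datasets can carry graded relevance, and the function names here are just for illustration.

```python
import math


def hits_at_k(ranked, gold, k):
    """1.0 if at least one gold document appears in the top k, else 0.0."""
    return 1.0 if any(doc in gold for doc in ranked[:k]) else 0.0


def precision_at_k(ranked, gold, k):
    """Fraction of the top k results that are gold documents."""
    return sum(doc in gold for doc in ranked[:k]) / k


def recall_at_k(ranked, gold, k):
    """Fraction of the gold documents that appear in the top k."""
    if not gold:
        return 0.0
    return sum(doc in gold for doc in ranked[:k]) / len(gold)


def ndcg_at_k(ranked, gold, k):
    """Binary-relevance nDCG: DCG of the ranking divided by the ideal DCG."""
    dcg = sum(1.0 / math.log2(i + 2) for i, doc in enumerate(ranked[:k]) if doc in gold)
    ideal = sum(1.0 / math.log2(i + 2) for i in range(min(len(gold), k)))
    return dcg / ideal if ideal else 0.0


ranked = ["d3", "d1", "d7", "d2"]  # system output, best first
gold = {"d1", "d2"}                # documents we wanted back
for metric in (hits_at_k, precision_at_k, recall_at_k, ndcg_at_k):
    print(metric.__name__, metric(ranked, gold, k=3))
```

In a real benchmark these per-query scores are averaged over all queries in the test set.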

I have spoken with a few Weaviate users who typically evaluate search performance with a small set of manually annotated queries and relevant documents. A new breakthrough is emerging at the intersection of Large Language Models and search. In addition to the flashier applications like retrieval-augmented ChatGPT, language models can be used to generate training or testing data for any given dataset, an approach led by papers such as InPars, Promptagator, and InPars-Light.

All you do is ask ChatGPT to please generate a query that would match the information in this document. There are a few more details to this, such as round-trip consistency filtering, so stay tuned for our Weaviate podcast with Leo Boytsov to learn more about this!
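As a rough illustration of the filtering step: generate one query per document, run it against the search system, and keep the pair only if the source document comes back in the top k. The sketch below is under heavy assumptions. `toy_generate_query` is a stand-in for the LLM call and `toy_search` is a stand-in for a real search over Weaviate; neither is how the papers implement it.

```python
def roundtrip_filter(documents, generate_query, search, k=3):
    """Keep (query, doc_id) pairs whose query retrieves its own source document.

    documents: dict mapping doc_id -> text
    generate_query: callable text -> query (an LLM call in practice)
    search: callable (query, k) -> list of doc_ids, best first
    """
    kept = []
    for doc_id, text in documents.items():
        query = generate_query(text)
        if doc_id in search(query, k):
            kept.append((query, doc_id))
    return kept


DOCS = {
    "a": "replication keeps copies of data on several nodes",
    "b": "hybrid search combines keyword and vector scores",
    "c": "replication keeps copies in sync",
}


def toy_generate_query(text):
    # Stand-in for prompting an LLM: just take the first three words.
    return " ".join(text.split()[:3])


def toy_search(query, k):
    # Stand-in for a real search: rank by word overlap, ties broken by id.
    query_words = set(query.lower().split())
    ranked = sorted(
        DOCS,
        key=lambda doc_id: (-len(query_words & set(DOCS[doc_id].lower().split())), doc_id),
    )
    return ranked[:k]


print(roundtrip_filter(DOCS, toy_generate_query, toy_search, k=1))
```

Here document "c" is dropped because its generated query is ambiguous and retrieves "a" first, which is exactly the kind of noisy pair the filter is meant to remove.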

Concluding thoughts on Podcasting

I think there are a lot of obvious benefits to making a podcast. It is a great way to meet people, both to collaborate on content and to develop friendships and partnerships. It is a great intellectual exercise to explore these ideas with someone who has a different perspective than you do. I would also add that podcasting has a nice fact verification component to it compared to a solo content medium like blogging. For example, if I make some wild claim about embedding models while interviewing Nils Reimers, he will probably respond with a shocked reaction and refute the claim. I am very happy to have taken up podcasting and super excited about this new ability to build a knowledge base from the podcasts!

