Integrations

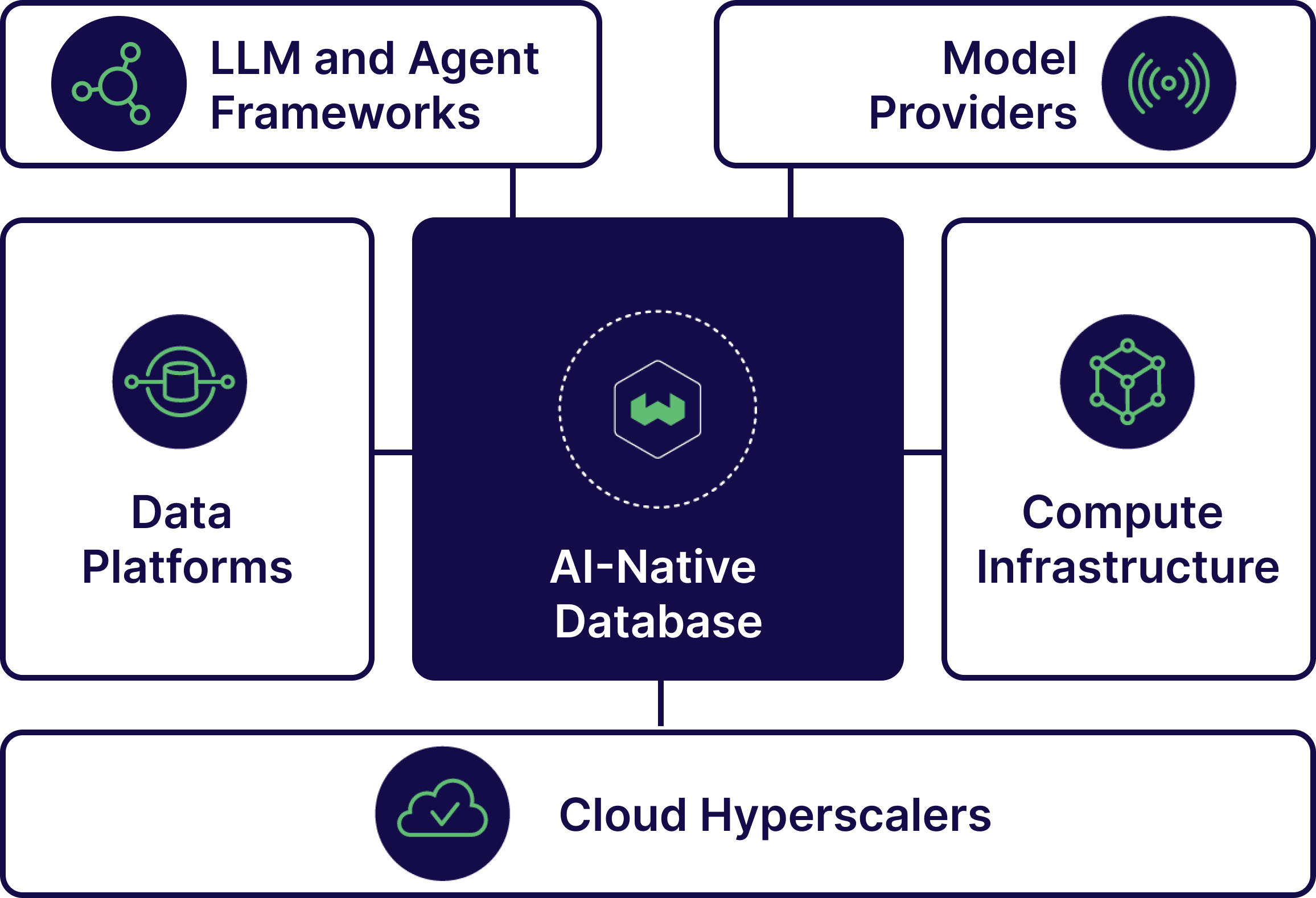

Weaviate's integration ecosystem lets developers build applications that combine Weaviate with other technologies.

All the notebooks and code examples are on Weaviate Recipes!

About the Categories

The ecosystem is divided into these categories:

- Cloud Hyperscalers - Large-scale computing and storage

- Compute Infrastructure - Run and scale containerized applications

- Data Platforms - Data ingestion and web scraping

- LLM and Agent Frameworks - Build agents and generative AI applications

- Operations - Tools for monitoring and analyzing generative AI workflows

List of Companies

| Company Category | Companies |
|---|---|
| Cloud Hyperscalers | AWS, Google |
| Compute Infrastructure | Modal, Replicate |
| Data Platforms | Airbyte, Aryn, Boomi, Box, Confluent, Astronomer, Context Data, Databricks, Firecrawl, IBM, Unstructured |
| LLM and Agent Frameworks | Agno, Composio, CrewAI, DSPy, Dynamiq, Haystack, LangChain, LlamaIndex, Semantic Kernel |
| Operations | AIMon, Arize, Cleanlab, Comet, DeepEval, Langtrace, LangWatch, Nomic, Patronus AI, Ragas, TruLens, Weights & Biases |

Model Provider Integrations

Weaviate integrates with self-hosted and API-based embedding models from a range of providers.

See the model providers documentation page for the full list.