Using Weaviate Cloud Queries in macOS apps

A practical guide to using Weaviate Cloud Queries in macOS apps.

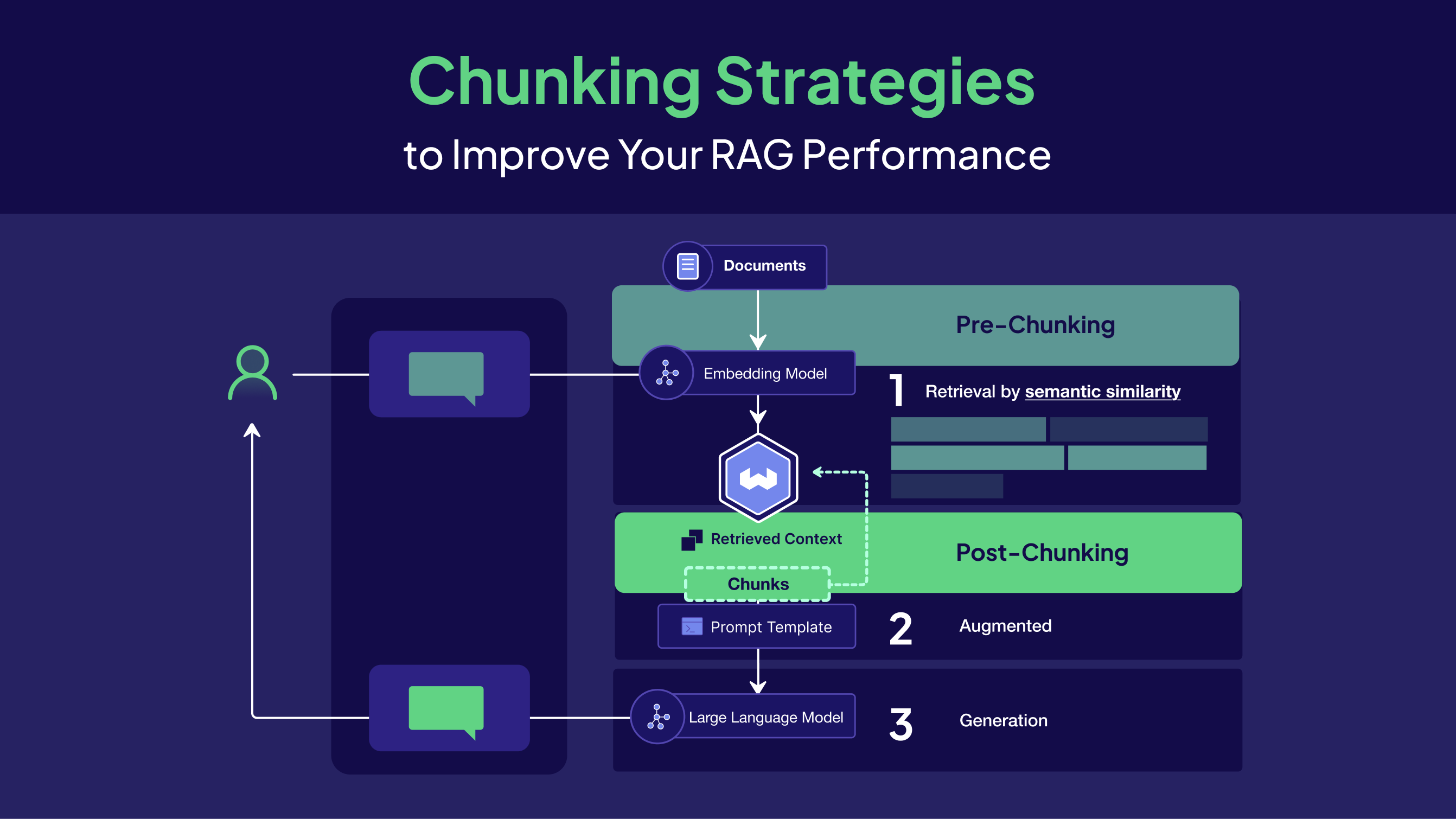

Learn how chunking strategies improve LLM RAG pipelines, retrieval quality, and agent memory performance across production AI systems.

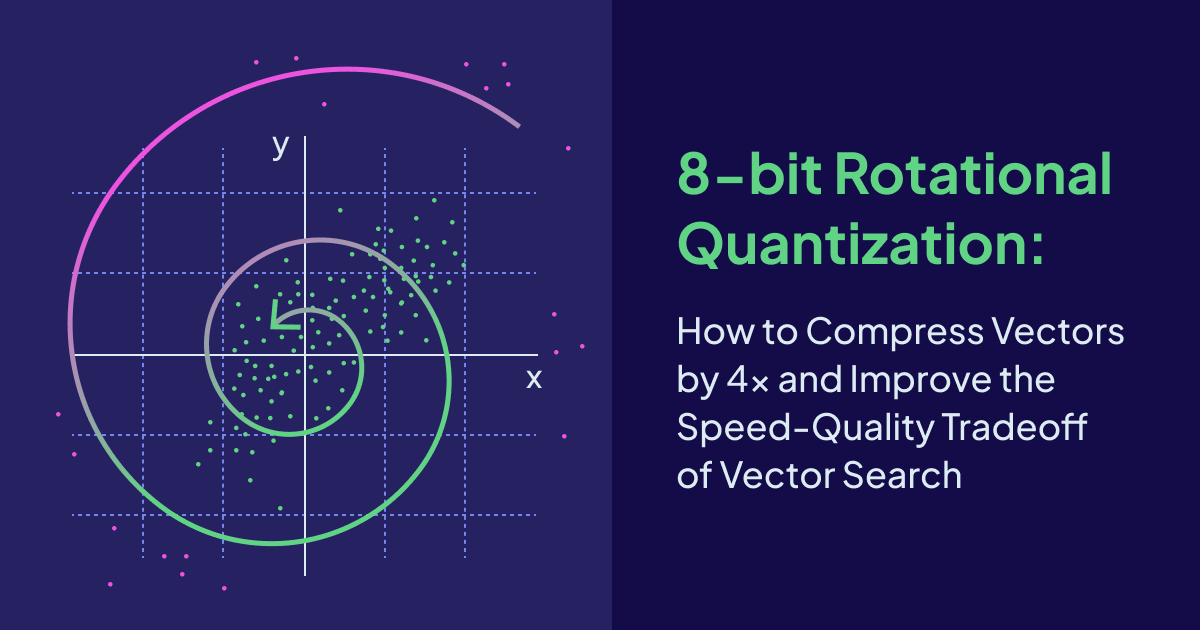

Get spun around by our new vector quantization algorithm that utilizes the power of random rotations to improve the speed-quality tradeoff of vector search with Weaviate.

Evals and Guardrails in enterprise workflows part 1

Elysia is an open-source, decision-tree-based agentic RAG framework that dynamically displays data, learns from user feedback, and chunks documents on demand. Built with pure Python logic and powered by Weaviate, it's designed to be the next evolution beyond traditional text-only AI assistants.

Key considerations for customizing an embedding model by fine-tuning it on company- or domain-specific data to improve downstream retrieval performance in RAG applications.

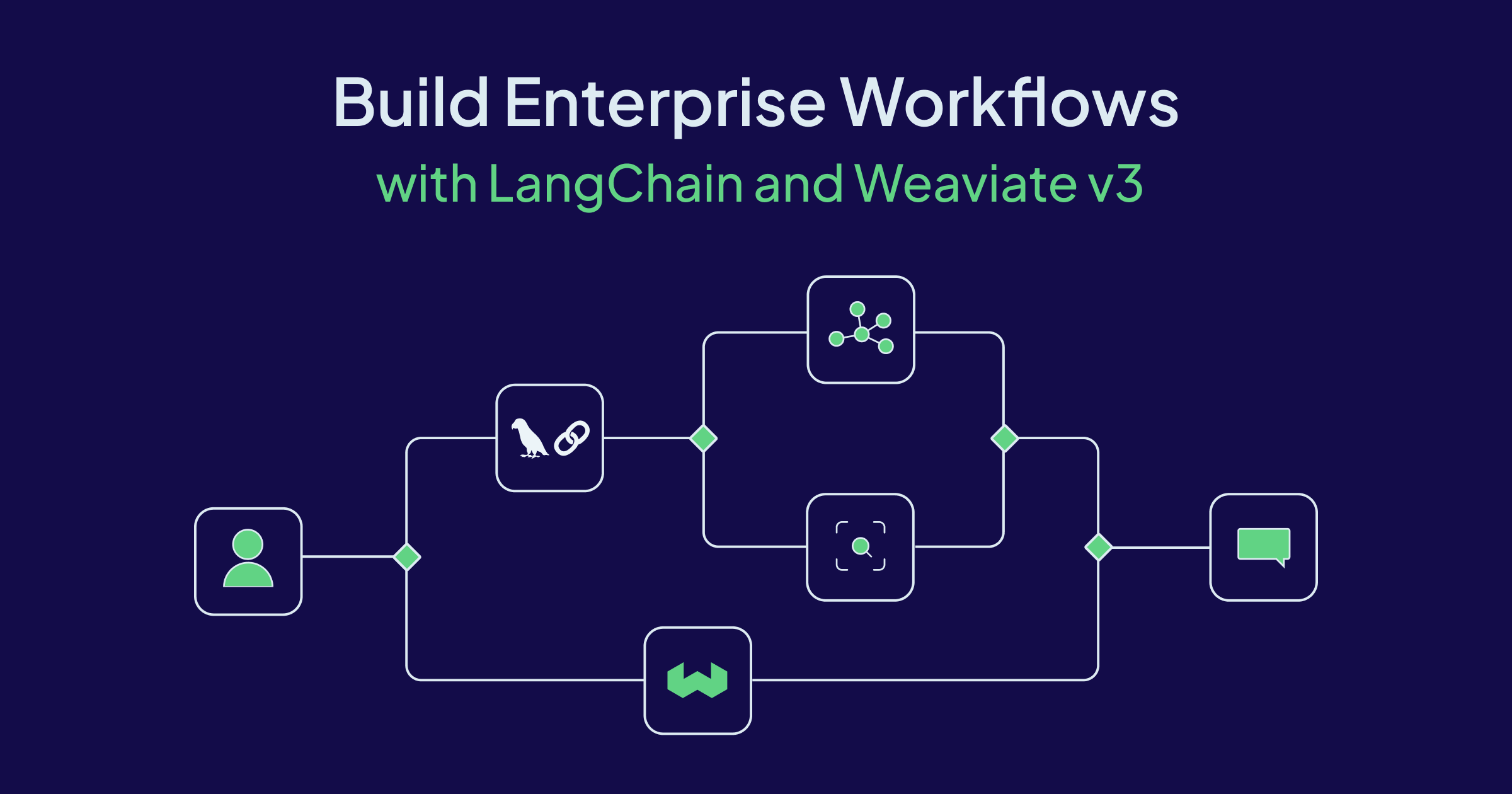

LangChain and Weaviate v3 power scalable AI with fast, type-safe RAG workflows, practical code, and future-ready features like LLM eval and event-driven design.

You can now use Weaviate with n8n for no-code agentic workflows. This article teaches you how.

Weaviate 1.32 adds collection aliases for no-downtime collection migrations, efficient and powerful rotational quantization (RQ), GA replica movement, memory reduction with compressed HNSW connections, and more!

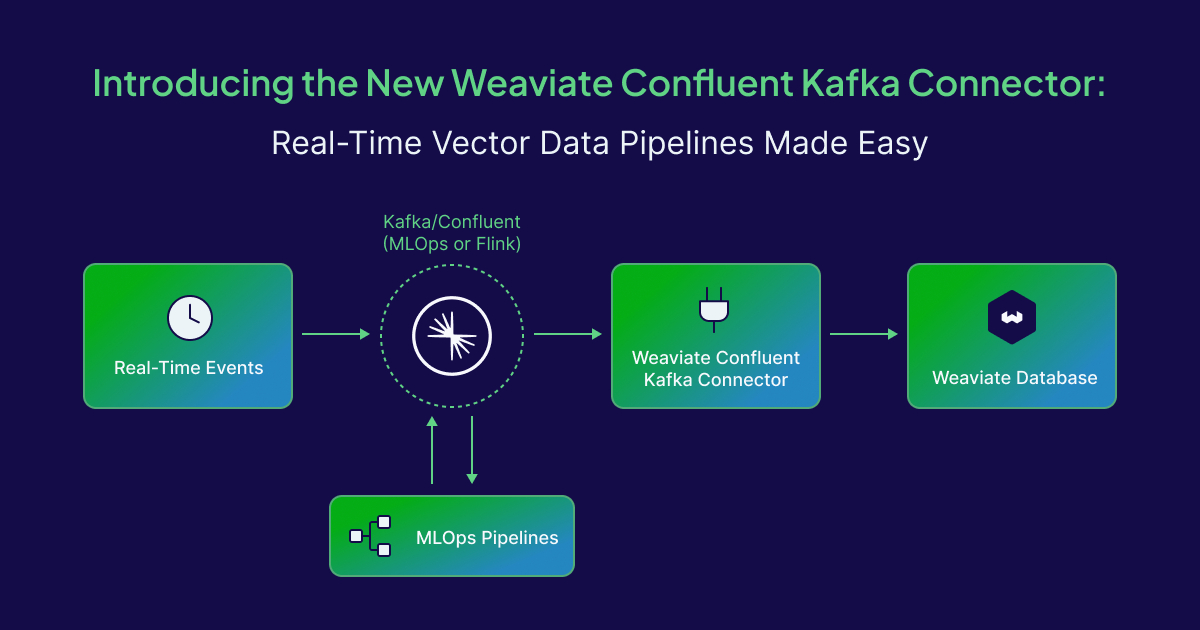

Learn about the new certified Weaviate Confluent Apache Kafka Connector!

Design for speed, build for experience.

Glowe is a Korean beauty recommendation app that uses domain knowledge agents, custom embedding strategies, and vector search to create personalized skincare routines. Try now: https://www.glowe.app/