Searches

As collections with named vectors can include multiple vector embeddings, any vector or similarity search must specify a "target" vector.

This applies for near_text and near_vector searches, as well as multimodal searches such as near_image and so on. Let's explore a few examples here.

Text searches

Code

Here, we look for entries in "MovieNVDemo" based on their similarity to the phrase "A joyful holiday film". Note, however, that we show multiple versions of the same query, each with a different target_vector parameter:

- Title

- Overview

- Poster

import weaviate

import weaviate.classes.query as wq

import os

# Instantiate your client (not shown). e.g.:

# headers = {"X-OpenAI-Api-Key": os.getenv("OPENAI_APIKEY")} # Replace with your OpenAI API key

# client = weaviate.connect_to_local(headers=headers)

# Get the collection

movies = client.collections.get("MovieNVDemo")

# Perform query

response = movies.query.near_text(

query="A joyful holiday film",

target_vector="title", # The target vector to search against

limit=5,

return_metadata=wq.MetadataQuery(distance=True),

return_properties=["title", "release_date", "tmdb_id", "poster"]

)

# Inspect the response

for o in response.objects:

print(

o.properties["title"], o.properties["release_date"].year, o.properties["tmdb_id"]

) # Print the title and release year (note the release date is a datetime object)

print(

f"Distance to query: {o.metadata.distance:.3f}\n"

) # Print the distance of the object from the query

client.close()

import weaviate

import weaviate.classes.query as wq

import os

# Instantiate your client (not shown). e.g.:

# headers = {"X-OpenAI-Api-Key": os.getenv("OPENAI_APIKEY")} # Replace with your OpenAI API key

# client = weaviate.connect_to_local(headers=headers)

# Get the collection

movies = client.collections.get("MovieNVDemo")

# Perform query

response = movies.query.near_text(

query="A joyful holiday film",

target_vector="overview", # The target vector to search against

limit=5,

return_metadata=wq.MetadataQuery(distance=True),

return_properties=["title", "release_date", "tmdb_id", "poster"]

)

# Inspect the response

for o in response.objects:

print(

o.properties["title"], o.properties["release_date"].year, o.properties["tmdb_id"]

) # Print the title and release year (note the release date is a datetime object)

print(

f"Distance to query: {o.metadata.distance:.3f}\n"

) # Print the distance of the object from the query

client.close()

import weaviate

import weaviate.classes.query as wq

import os

# Instantiate your client (not shown). e.g.:

# headers = {"X-OpenAI-Api-Key": os.getenv("OPENAI_APIKEY")} # Replace with your OpenAI API key

# client = weaviate.connect_to_local(headers=headers)

# Get the collection

movies = client.collections.get("MovieNVDemo")

# Perform query

response = movies.query.near_text(

query="A joyful holiday film",

target_vector="poster_title", # The target vector to search against

limit=5,

return_metadata=wq.MetadataQuery(distance=True),

return_properties=["title", "release_date", "tmdb_id", "poster"]

)

# Inspect the response

for o in response.objects:

print(

o.properties["title"], o.properties["release_date"].year, o.properties["tmdb_id"]

) # Print the title and release year (note the release date is a datetime object)

print(

f"Distance to query: {o.metadata.distance:.3f}\n"

) # Print the distance of the object from the query

client.close()

Explain the code

Each named vector here is based on a different property of the movie data.

The first search compares the meaning of the movie title with the query, the second search compares the entire summary (overview) with the query, and the third compares the poster (and the title) with the query.

Weaviate also allows each named vector to be set with a different vectorizer. You will recall that the poster_title vector is created by the CLIP models, while the title and overview properties are created by the OpenAI model.

As a result, each named vector can be further specialized by using the right model for the right property.

Explain the results

The results of each search are different, as they are based on different properties of the movie data.

title vs overview

Note that the search with the overview target vector includes titles like "Home Alone" and "Home Alone 2: Lost in New York", which are not included in the other searches.

This is because the plots of these movies are holiday-themed, even though the titles are not obviously joyful or holiday-related.

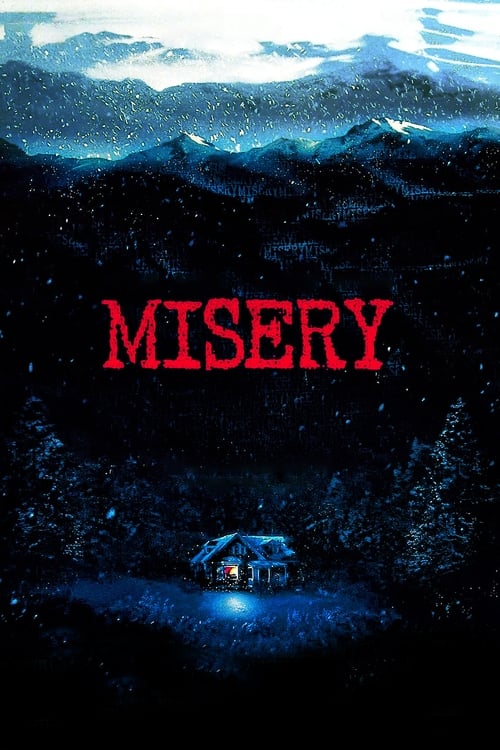

poster

The search with poster_title target vector interestingly includes "Misery" - the Stephen King horror movie! This is very likely because the poster of the movie is a snowy scene. And since the CLIP vectorizer is trained to identify elements of images, it identifies this terrifying film as a result of the search.

Given the imagery of the poster only and no other context, you would have to say that the search isn't wrong, even though anyone who's read the book or watched the movie would agree.

- Title

- Overview

- Poster

How the Grinch Stole Christmas 2000 8871

Distance to query: 0.162

The Nightmare Before Christmas 1993 9479

Distance to query: 0.177

The Pursuit of Happyness 2006 1402

Distance to query: 0.182

Jingle All the Way 1996 9279

Distance to query: 0.184

Mrs. Doubtfire 1993 788

Distance to query: 0.189

How the Grinch Stole Christmas 2000 8871

Distance to query: 0.148

Home Alone 1990 771

Distance to query: 0.164

Onward 2020 508439

Distance to query: 0.172

Home Alone 2: Lost in New York 1992 772

Distance to query: 0.175

Little Miss Sunshine 2006 773

Distance to query: 0.176

Posters for the top 5 matches:

Life Is Beautiful 1997 637

Distance to query: 0.621

Groundhog Day 1993 137

Distance to query: 0.623

Jingle All the Way 1996 9279

Distance to query: 0.625

Training Day 2001 2034

Distance to query: 0.627

Misery 1990 1700

Distance to query: 0.632

Hybrid search

Code

This example finds entries in "MovieNVDemo" with the highest hybrid search scores for the term "history", and prints out the title and release year of the top 5 matches.

import weaviate

import weaviate.classes.query as wq

import os

# Instantiate your client (not shown). e.g.:

# headers = {"X-OpenAI-Api-Key": os.getenv("OPENAI_APIKEY")} # Replace with your OpenAI API key

# client = weaviate.connect_to_local(headers=headers)

# Get the collection

movies = client.collections.get("MovieNVDemo")

# Perform query

response = movies.query.hybrid(

query="history",

target_vector="overview", # The target vector to search against

limit=5,

return_metadata=wq.MetadataQuery(score=True)

)

# Inspect the response

for o in response.objects:

print(

o.properties["title"], o.properties["release_date"].year

) # Print the title and release year (note the release date is a datetime object)

print(

f"Hybrid score: {o.metadata.score:.3f}\n"

) # Print the hybrid search score of the object from the query

client.close()

Explain the code

Hybrid search with named vectors works the same way as other vector searches with named vectors. You must provide a target_vector parameter to specify the named vector for the vector search component of the hybrid search.

Keyword search

As named vectors affect the vector representations of objects, they do not affect keyword searches. You can perform keyword searches on named vector collections using the same syntax as you would for any other collections.

Named vectors in search

The use of named vectors enables flexible search options that can be tailored to your needs.

Each object can have as many named vectors as you would like, with any combinations of properties and vectorizers, or even multiple custom vectors provided by your own models.

This flexibility allows you to create databases with vector representations that are tailored to your specific use case, and to search for similar items based on any combination of properties.

What about RAG?

RAG, or retrieval augmented generation, queries with named vectors work the same way as with other vector searches with named vectors. You must provide a target_vector parameter to specify the named vector for the vector search component of the RAG query.

This, in turn, can improve the quality of the generation. Let's explore a few examples in the next section.

Questions and feedback

If you have any questions or feedback, let us know in the user forum.