- There are two fusion algorithms available in Weaviate:

rankedFusionandrelativeScoreFusion. rankedFusionwas the default algorithm until 1.24relativeScoreFusionis the newer algorithm, introduced in 1.20 and made default in 1.24, and likely the better choice for most.- We would love to get your feedback on hybrid search. Please fill out this short survey.

As you might know already, Weaviate can perform many different types of searches, including vector search and keyword search. Vector search is based on similarities of meaning to the input, whereas keyword search is based on how often the input words occur in the results.

Vector and keyword based search each have their strengths and weaknesses that arise from this difference, where vector search is more forgiving semantically and keyword search is more precise. Hybrid search enables a "best-of-both-worlds" type capability using both of these search types.

That probably sounds simple enough. But do you know how hybrid search combines these results? And that Weaviate recently added a new algorithm for how this is done?

In this post, we’ll dive into exactly the world of hybrid search to discuss how it works, how results are produced, the algorithms used, and more. So let’s get into it!

- Vector search and keyword search are also known as dense vector search and sparse vector search respectively.

- Keyword search is also called a BM25 search in Weaviate, as it is based on the BM25F scoring algorithm.

How does hybrid search work, exactly?

Here is an example of a hybrid search:

response = (

client.query

.get("JeopardyQuestion", ["question", "answer"])

.with_hybrid(query="food", alpha=0.5)

.with_limit(5)

.do()

)

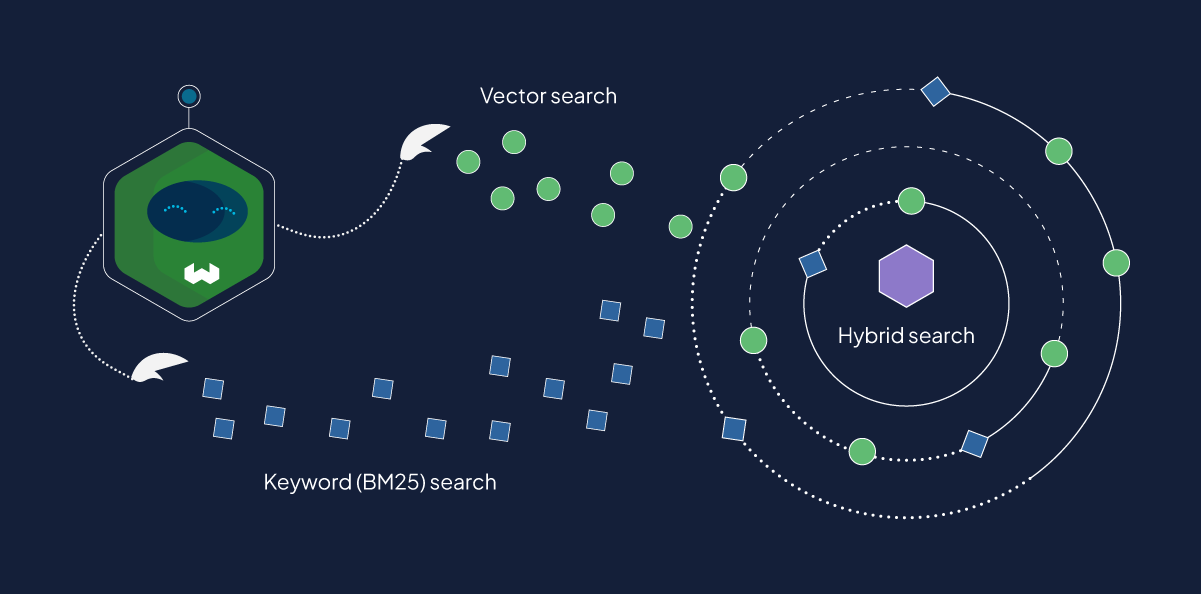

As mentioned, a hybrid search is really two searches under-the-hood. It performs a vector search (similar to nearText or nearVector in Weaviate) to find most similar objects to the vector of your query. Meanwhile, it also performs a keyword search, which ranks results based on how often the query terms occur.

In other words, a hybrid search performs both of these searches and combines the results.

- Vector search

- Keyword search

response = (

client.query

.get("JeopardyQuestion", ["question", "answer"])

.with_near_text({"concepts": ["food"]})

.with_limit(5)

.do()

)

response = (

client.query

.get("JeopardyQuestion", ["question", "answer"])

.with_bm25(query="food")

.with_limit(5)

.do()

)

Each of these searches will produce results like these:

- Vector search

- Keyword search

{

"data": {

"Get": {

"JeopardyQuestion": [

{

"answer": "a closer grocer",

"question": "A nearer food merchant"

},

{

"answer": "Famine",

"question": "From the Latin for \"hunger\", it's a period when food is extremely scarce"

},

{

"answer": "Tofu",

"question": "A popular health food, this soybean curd is used to make a variety of dishes & an ice cream substitute"

},

{

"answer": "gastronomy",

"question": "This word for the art & science of good eating goes back to Greek for \"belly\""

},

{

"answer": "devour flour",

"question": "Voraciously eat an \"all-purpose\" baking ingredient"

}

]

}

}

}

{

"data": {

"Get": {

"JeopardyQuestion": [

{

"answer": "food stores (supermarkets)",

"question": "This type of retail store sells more shampoo & makeup than any other"

},

{

"answer": "cake",

"question": "Devil's food & angel food are types of this dessert"

},

{

"answer": "a closer grocer",

"question": "A nearer food merchant"

},

{

"answer": "honey",

"question": "The primary source of this food is the Apis mellifera"

},

{

"answer": "Giraffe",

"question": "Acacia leaves are the favorite food of this tallest mammal"

}

]

}

}

}

As you see in the above examples, vector and keyword searches produce results with objects in different order to each other, if not different objects altogether. And if we inspect the results of our equivalent hybrid query, you’ll notice results from both vector and keyword search results.

{

"data": {

"Get": {

"JeopardyQuestion": [

{

"answer": "a closer grocer",

"question": "A nearer food merchant"

},

{

"answer": "food stores (supermarkets)",

"question": "This type of retail store sells more shampoo & makeup than any other"

},

{

"answer": "cake",

"question": "Devil's food & angel food are types of this dessert"

},

{

"answer": "Famine",

"question": "From the Latin for \"hunger\", it's a period when food is extremely scarce"

},

{

"answer": "Tofu",

"question": "A popular health food, this soybean curd is used to make a variety of dishes & an ice cream substitute"

}

]

}

}

}

So, how did they get there?

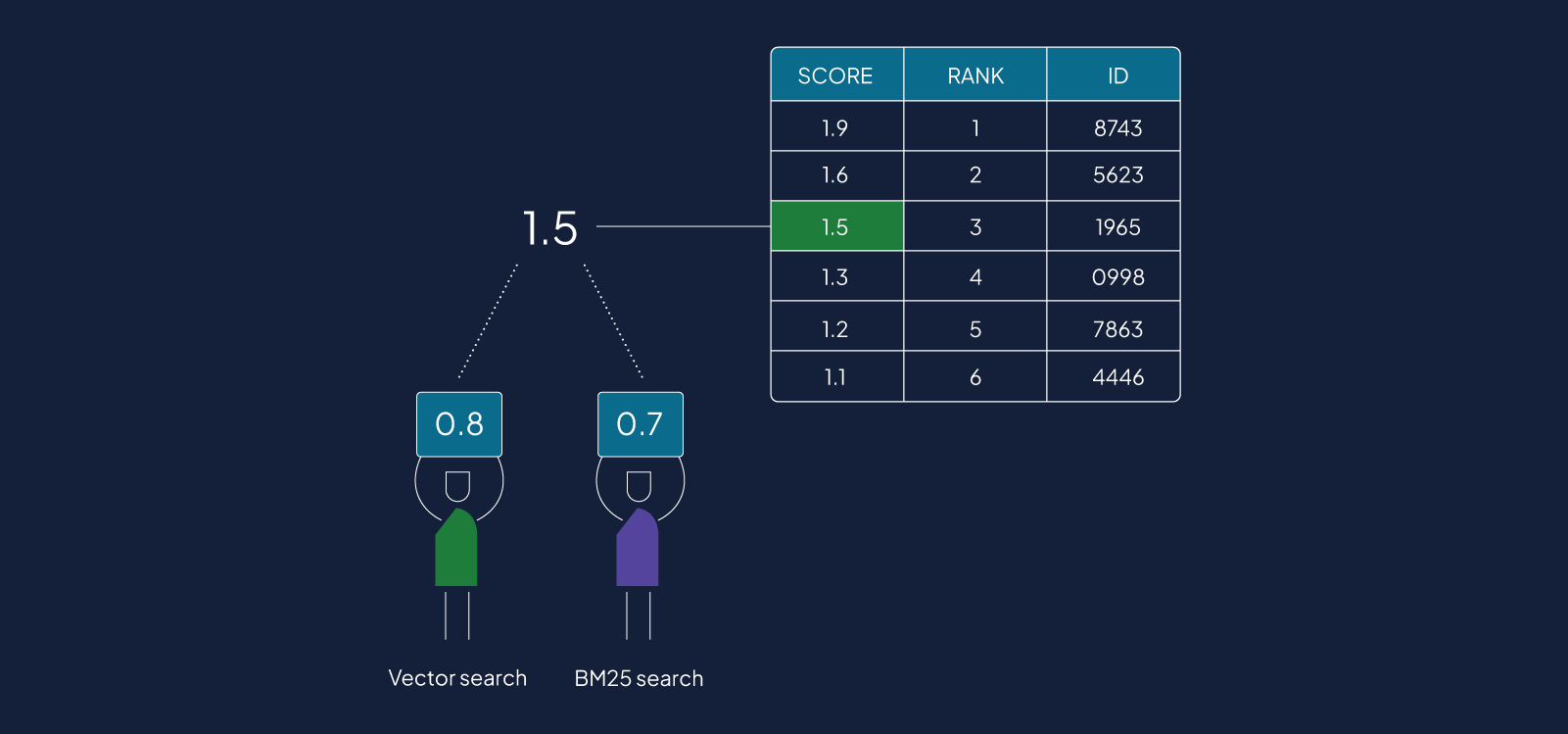

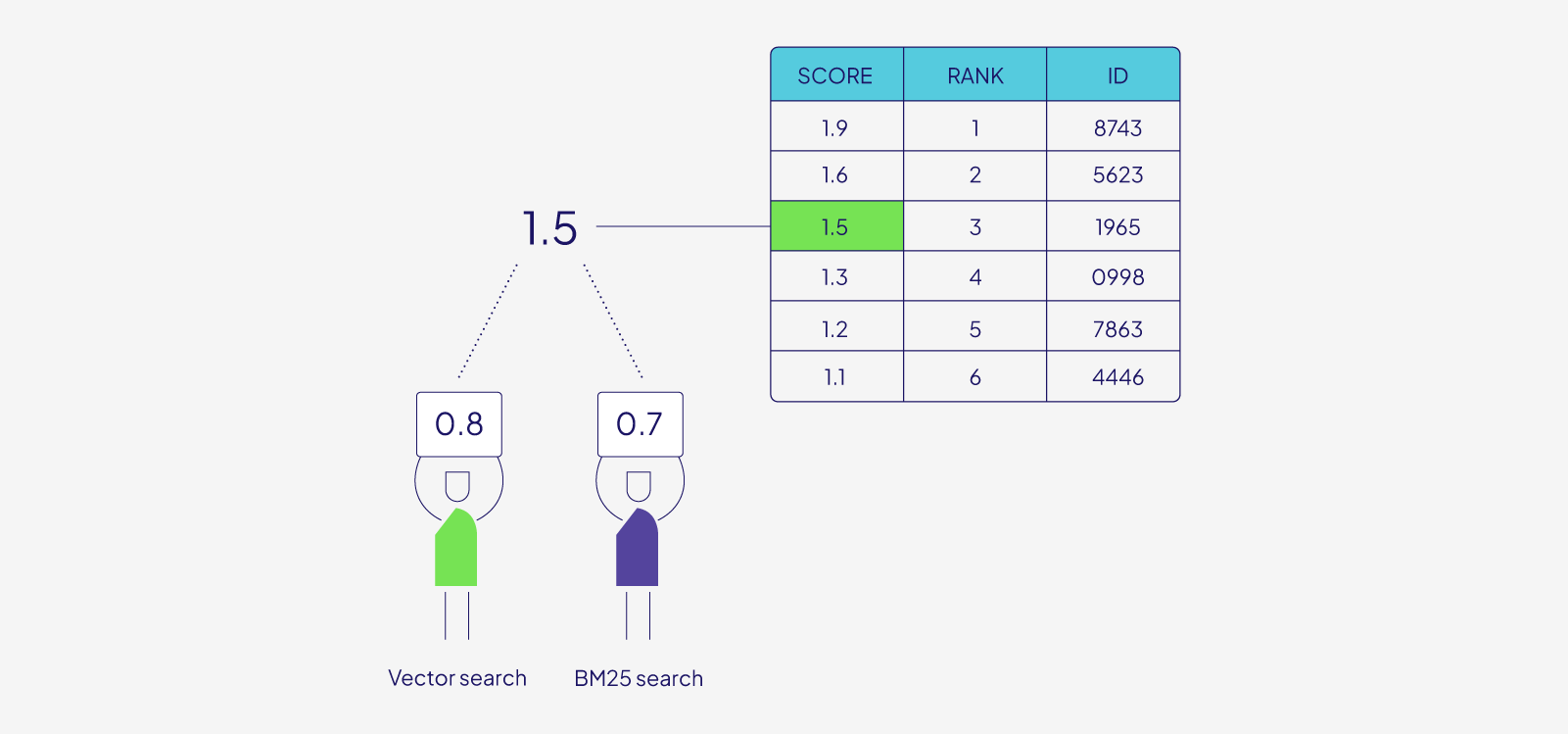

A short answer is that Weaviate calculates for each object a weighted score, using both result sets. But given that they are two very different search types, how might we combine any numerical outputs from each search? This is not a trivial decision due to the two search types producing different metrics to each other. In some ways, this is where the rubber meets the road, and thus the implementation here is an important part of the hybrid search story.

Hybrid searches can be ‘weighted’ to give more weight to the vector or keyword search. This is done using the alpha parameter. You can read more here.

Fusion algorithms

Each of the two (vector and keyword) searches returns a set of results with its own scores. These scores are then handed over to the selected fusion algorithm. A job of the fusion algorithm is to prepare the scores from each search to be compatible with each other, so that they can be weighted and added up and presented to the user.

As of 1.20, there are two algorithms available - one called rankedFusion (default until 1.24) and another called relativeScoreFusion, the default from 1.24.

rankedFusion

The rankedFusion algorithm is the original hybrid fusion algorithm that has been available since the launch of hybrid search in Weaviate.

In this algorithm, each object is scored according to its position in the results for the given search, starting from the highest score for the top-ranked object and decreasing down the order. The total score is calculated by adding these rank-based scores from the vector and keyword searches.

Now, let’s take a look at the newer relativeScoreFusion algorithm.

relativeScoreFusion

The relativeScoreFusion algorithm was added in Weaviate version 1.20.

In contrast to rankedFusion, however, relativeScoreFusion derives each objects score by normalizing the metrics output by the vector search and keyword search respectively. The highest value becomes 1, the lowest value becomes 0, and others end up in between according to this scale. The total score is thus calculated by a scaled sum of normalized vector similarity and normalized BM25 score.

Full example

After reviewing each algorithm, let’s go through a complete example to demonstrate the difference between them.

Base Search Results

Let's say that a search returns five objects with document id (from 0 to 4), and scores from keyword and vector search, ordered by score:

| Search Type | (id): score | (id): score | (id): score | (id): score | (id): score |

|---|---|---|---|---|---|

| Keyword | (1): 5 | (0): 2.6 | (2): 2.3 | (4): 0.2 | (3): 0.09 |

| Vector | (2): 0.6 | (4): 0.598 | (0): 0.596 | (1): 0.594 | (3): 0.009 |

Ranked Fusion

The score depends on the rank of each result and is computed according to 1/(RANK + 60), resulting in:

| Search Type | (id): score | (id): score | (id): score | (id): score | (id): score |

|---|---|---|---|---|---|

| Keyword | (1): 0.0154 | (0): 0.0160 | (2): 0.0161 | (4): 0.0167 | (3): 0.0166 |

| Vector | (2): 0.016502 | (4): 0.016502 | (0): 0.016503 | (1): 0.016503 | (3): 0.016666 |

As you can see, the results for each rank are identical, regardless of the input score.

Relative Score Fusion

Here, we normalize the scores – the largest score is set to 1 and the lowest to 0, and all entries in-between are scaled according to their relative distance to the maximum and minimum values.

| Search Type | (id): score | (id): score | (id): score | (id): score | (id): score |

|---|---|---|---|---|---|

| Keyword | (1): 1.0 | (0): 0.511 | (2): 0.450 | (4): 0.022 | (3): 0.0 |

| Vector | (2): 1.0 | (4): 0.996 | (0): 0.993 | (1): 0.986 | (3): 0.0 |

Here, the scores reflect the relative distribution of the original scores. For example, the vector search scores of the first 4 documents were almost identical, which is still the case for the normalized scores.

Summary

Before adding these scores up, they are weighted according to the alpha parameter. Let’s assume alpha=0.5, meaning both search types contribute equally to the final result and therefore each score is multiplied by 0.5.

Now, we can add the scores for each document up and compare the results from both fusion algorithms.

| Algorithm Type | (id): score | (id): score | (id): score | (id): score | (id): score |

|---|---|---|---|---|---|

| Ranked | (2): 0.016301 | (1): 0.015952 | (0): 0.015952 | (4): 0.016600 | (3): 0.016630 |

| Relative | (1): 0.993 | (0): 0.752 | (2): 0.725 | (4): 0.509 | (3): 0.0 |

What can we learn from this?

For the vector search, the scores for the top 4 objects (IDs 2, 4, 0, 1) were almost identical, and all of them were good results. While for the keyword search, one object (ID 1) was much better than the rest.

This is captured in the final result of relativeScoreFusion, which identified the object ID 1 the top result. This is justified because this document was the best result in the keyword search with a big gap to the next-best score and in the top group of vector search.

In contrast, for rankedFusion, the object ID 2 is the top result, closely followed by objects ID 1 and ID 0.

Which one to use?

Now that you know more about these two algorithms, you’re probably wondering this key question: which one to use, and when? Generally, we think that relativeScoreFusion might be a good choice.

The main reason is that relativeScoreFusion retains more information from the original searches than rankedFusion, which only retains the rankings. More generally we believe that the nuances captured in the vector and keyword search metrics are more likely to be reflected in rankings produced by relativeScoreFusion.

Here are some additional notes on how we see these two:

Recall performance / benchmarks

In developing these two algorithms, we carried out some internal benchmarks testing recall on a standard (FIQA) dataset. According to our internal benchmarks, the default relativeScoreFusion algorithm showed a ~6% improvement in recall over the rankedFusion method.

This is quite a significant improvement. So in the absence of specific characteristics of your dataset or a need to retain backwards compatibility with previous searches, relativeScoreFusion might be a good choice.

Use with AutoCut

In 1.20 we introduced the AutoCut feature, which can intelligently retrieve groups of objects from a search. AutoCut relies on there being natural "clusters" (groups of objects with close scores).

AutoCut works well with relativeScoreFusion, which often results in natural clusters that autocut can detect.

Why the default choice?

v1.24 the default method is Relative Score FusionGiven our earlier explanations, you might be wondering why rankedFusion was the default algorithm. In fact, currently we believe that relativeScoreFusion is more likely to be the better-performing algorithm.

The answer is that rankedFusion is the older, reliable choice that has worked quite well. In the meantime, we have been reviewing how relativeScoreFusion is received by the community, and making small tweaks like adding over-search to make it more robust.

So far, the reaction has been positive. But we are still in the evaluation phase, and we would love to get additional feedback from you, our users. We have prepared this short survey. We would really appreciate your input. Please let us know what you think!

Wrap-up

Hybrid search in Weaviate offers a powerful blend of vector and keyword search, using the strengths of both to deliver semantically rich results while respecting precision of keyword searches.

As we've explored, the introduction of relativeScoreFusion expands Weaviate’s hybrid search capabilities that began its life with the rankedFusion algorithm. We invite you to dive in, experiment with these fusion algorithms, and share your experiences.

Further resources

Ready to start building?

Check out the Quickstart tutorial, or build amazing apps with a free trial of Weaviate Cloud (WCD).

Don't want to miss another blog post?

Sign up for our bi-weekly newsletter to stay updated!

By submitting, I agree to the Terms of Service and Privacy Policy.