Ollama + Weaviate

The Ollama library lets you run a wide range of models on your own device. Weaviate integrates with the Ollama library, so you can use compatible models directly from the Weaviate database.

These integrations empower developers to build sophisticated AI-driven applications with ease.

Integrations with Ollama

Weaviate integrates with compatible Ollama models by accessing the locally hosted Ollama API.
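Because both integrations depend on that local API being reachable, it can help to confirm that Ollama is running before configuring Weaviate. The following is a minimal sketch using only the Python standard library. It assumes Ollama is listening on its default address, `http://localhost:11434`, and queries the `/api/tags` endpoint, which lists locally available models. The helper names `parse_model_names` and `list_local_models` are illustrative and not part of any library.

```python
import json
from urllib.request import urlopen

# Assumption: Ollama is listening on its default local address.
OLLAMA_URL = "http://localhost:11434"


def parse_model_names(payload: dict) -> list[str]:
    """Extract model names from the JSON body returned by GET /api/tags."""
    return [model["name"] for model in payload.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> list[str]:
    """Ask the local Ollama API which models have been pulled."""
    with urlopen(f"{base_url}/api/tags", timeout=timeout) as response:
        return parse_model_names(json.load(response))
```

Calling `list_local_models()` on the machine that runs Ollama should return the names of the models you have pulled. If the call raises a connection error, Weaviate is unlikely to be able to reach the API either.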

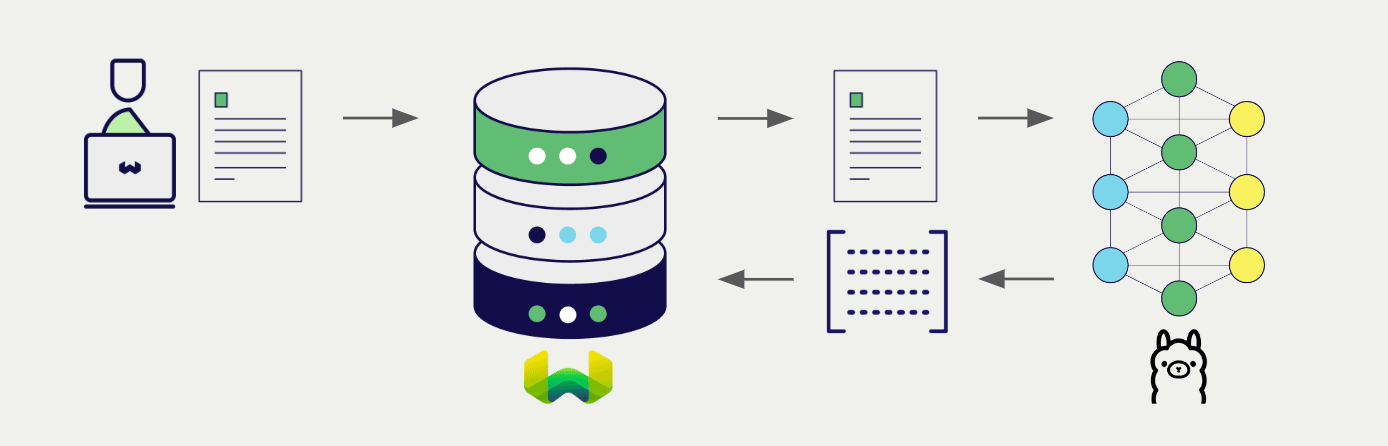

Embedding models for vector search

Ollama's embedding models transform text data into vector embeddings, capturing meaning and context.

Weaviate integrates with Ollama's embedding models to enable seamless vectorization of data. This integration allows users to perform semantic and hybrid search operations without the need for additional preprocessing or data transformation steps.
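As a rough illustration of what this configuration can look like, the sketch below builds a collection definition that delegates vectorization to Ollama. It assumes the `text2vec-ollama` module with `apiEndpoint` and `model` settings. The collection name `Article`, the `title` property, the model `nomic-embed-text`, and the endpoint `http://host.docker.internal:11434` (a common choice when Weaviate runs in Docker and Ollama runs on the host) are placeholders. Treat the integration page as the authoritative reference for the exact options.

```python
import json


def ollama_vectorized_collection(name: str, api_endpoint: str, model: str) -> dict:
    """Build a collection definition that asks Weaviate to vectorize with Ollama."""
    return {
        "class": name,
        # Assumption: the Ollama embedding module is enabled on the Weaviate instance.
        "vectorizer": "text2vec-ollama",
        "moduleConfig": {
            "text2vec-ollama": {
                "apiEndpoint": api_endpoint,  # where Weaviate can reach Ollama
                "model": model,               # an embedding model pulled in Ollama
            }
        },
        # Hypothetical property, included only to make the example complete.
        "properties": [{"name": "title", "dataType": ["text"]}],
    }


definition = ollama_vectorized_collection(
    name="Article",
    api_endpoint="http://host.docker.internal:11434",
    model="nomic-embed-text",
)
print(json.dumps(definition, indent=2))
```

With a definition along these lines in place, objects added to the collection are vectorized at import time, and queries are vectorized with the same model at search time.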

Ollama embedding integration page

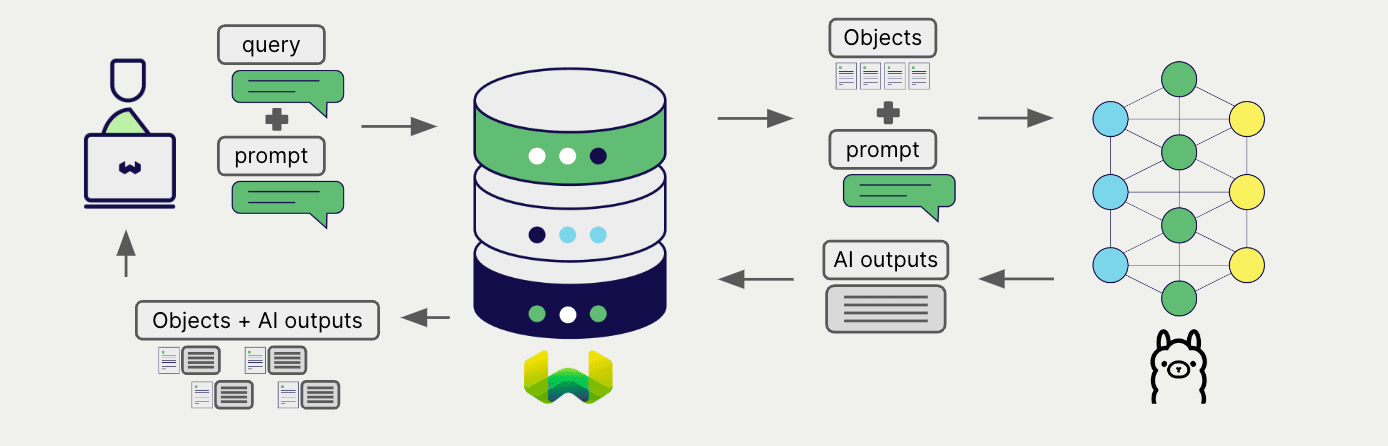

Generative AI models for RAG

Ollama's generative AI models can generate human-like text based on given prompts and contexts.

Weaviate's generative AI integration enables users to perform retrieval-augmented generation (RAG) directly from the Weaviate database. This combines Weaviate's efficient storage and fast retrieval capabilities with Ollama's generative AI models to generate personalized and context-aware responses.
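To make the RAG pattern concrete, the sketch below shows the two steps in isolation: combining retrieved text with a question into a single prompt, and building a request body for Ollama's `/api/generate` endpoint. This only illustrates the general pattern and does not describe how Weaviate implements it internally. With the integration configured, Weaviate performs these steps for you. The function names, the prompt wording, and the model name `llama3` are assumptions made for the example.

```python
def build_rag_prompt(question: str, retrieved: list[str]) -> str:
    """Combine retrieved passages and a question into a single prompt."""
    context = "\n".join(f"- {text}" for text in retrieved)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


def build_generate_request(model: str, prompt: str) -> dict:
    """Build a JSON body for a non-streaming POST to Ollama's /api/generate."""
    return {"model": model, "prompt": prompt, "stream": False}


# Hypothetical search results standing in for objects retrieved from Weaviate.
passages = [
    "Ollama runs models on your own device.",
    "Weaviate stores objects together with their vector embeddings.",
]
prompt = build_rag_prompt("Where do Ollama models run?", passages)
request_body = build_generate_request("llama3", prompt)
```

Grounding the prompt in retrieved objects is what lets the generated response reflect your own data rather than only what the model learned during training.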

Ollama generative AI integration page

Summary

These integrations enable developers to leverage powerful Ollama models from directly within Weaviate.

In turn, they simplify and speed up the process of building AI-driven applications, so that you can focus on creating innovative solutions.

Get started

These integrations require a locally hosted Weaviate instance, because Weaviate must be able to reach the Ollama API that you host yourself.

Go to the relevant integration page to learn how to configure Weaviate with the Ollama models and start using them in your applications.

Questions and feedback

If you have any questions or feedback, let us know in the user forum.