OctoAI + Weaviate (Deprecated)

OctoAI integrations are deprecated

OctoAI announced that it is winding down the commercial availability of its services by 31 October 2024. Accordingly, the Weaviate OctoAI integrations are deprecated. Do not use these integrations for new projects.

If you have a collection that uses an OctoAI integration, your options depend on whether you are using OctoAI's embedding models or its generative models. Each case is covered in its own section below.

For collections with OctoAI embedding integrations

OctoAI provided thenlper/gte-large as the embedding model. This model is also available through the Hugging Face API.

After the shutdown date, this model will no longer be available through OctoAI. If you are using this integration, you have the following options:

Option 1: Use the existing collection, and provide your own vectors

You can continue to use the existing collection, provided that you generate the required embeddings yourself, by some other method, for any new data and for queries. If you are unfamiliar with the "bring your own vectors" approach, refer to this starter guide.
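As a rough illustration of this option, the sketch below pairs each object with a vector that you compute yourself and rejects vectors of the wrong size before they reach Weaviate. The `embed` callable is hypothetical: it stands in for whatever service or local model you use to run thenlper/gte-large. The dimension of 1024 is an assumption about that model and should be checked against the vectors already stored in your collection.

```python
from typing import Callable, Sequence

# Assumption: thenlper/gte-large produces 1024-dimensional vectors.
# Verify this against the vectors already stored in your collection.
EXPECTED_DIM = 1024


def prepare_object(
    properties: dict,
    text: str,
    embed: Callable[[str], Sequence[float]],  # hypothetical, user-supplied
    expected_dim: int = EXPECTED_DIM,
) -> dict:
    """Return the properties together with a self-generated vector."""
    vector = list(embed(text))
    if len(vector) != expected_dim:
        raise ValueError(
            f"Expected a {expected_dim}-dimensional vector, got {len(vector)}"
        )
    return {"properties": properties, "vector": vector}
```

With the Weaviate Python client v4, the resulting dictionary could be passed to `collection.data.insert(properties=..., vector=...)`, and a query vector produced the same way could be passed to `collection.query.near_vector(...)`. Vectors from a different model would not be comparable with the existing ones, which is why the dimension check is only a first line of defense.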

Option 2: Migrate to a new collection with another model provider

Alternatively, you can migrate your data to a new collection (see How to migrate below). When you do so, you can either re-use the existing embeddings or choose a new model.

- Re-using the existing embeddings will save on time and inference costs.

- Choosing a new model will allow you to explore new models and potentially improve the performance of your application.

If you would like to re-use the existing embeddings, you must select a model provider (e.g. Hugging Face API) that offers the same embedding model.

You can also select a new model with any embedding model provider. This will require you to re-generate the embeddings for your data, as the existing embeddings will not be compatible with the new model.

For collections with OctoAI generative AI integrations

If you are only using the generative AI integration, you do not need to migrate your data to a new collection.

Follow this how-to to re-configure your collection with a new generative AI model provider. Note that this requires Weaviate v1.25.23, v1.26.8, v1.27.1, or later.
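The version requirement can be checked programmatically before you attempt the re-configuration. The sketch below reads the three listed versions as minimum patch releases on their respective minor lines, with any release after the v1.27 line also qualifying. That reading is an assumption, and the function name is illustrative.

```python
import re

# Minimum patch release per minor line, as listed in the text above.
MIN_PATCH = {(1, 25): 23, (1, 26): 8, (1, 27): 1}


def supports_generative_reconfig(version: str) -> bool:
    """Return True if `version` meets the stated minimum requirement."""
    match = re.match(r"v?(\d+)\.(\d+)\.(\d+)", version.strip())
    if match is None:
        raise ValueError(f"Unrecognized version string: {version!r}")
    major, minor, patch = (int(part) for part in match.groups())
    if (major, minor) in MIN_PATCH:
        return patch >= MIN_PATCH[(major, minor)]
    # Anything newer than the 1.27 line qualifies; anything older does not.
    return (major, minor) > (1, 27)
```

With the Weaviate Python client v4, the server version string should be available as `client.get_meta()["version"]`.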

You can select any model provider that offers generative AI models.

If you would like to keep using the same model that you used with OctoAI, providers such as Anyscale, FriendliAI, and Mistral, or local models with Ollama, each offer some of the models that OctoAI provided.

How to migrate

An outline of the migration process is as follows:

- Create a new collection with the desired model provider integration(s).

- Export the data from the existing collection.

  - (Optional) To re-use the existing embeddings, export the data with the existing embeddings.

- Import the data into the new collection.

  - (Optional) To re-use the existing embeddings, import the data with the existing embeddings.

- Update your application to use the new collection.

See How-to manage data: migrate data for examples on migrating data objects between collections.
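The export and import steps of the outline above might be sketched as follows. This is an illustration, not the documented migration procedure. It assumes the Weaviate Python client v4, where a collection object exposes `iterator(include_vector=...)` and `batch.dynamic()`, and where an unnamed vector is returned under the `"default"` key. Collections that use named vectors would need different handling.

```python
def copy_objects(source, target, reuse_vectors: bool = True) -> int:
    """Copy every object from `source` to `target`; return the count.

    `source` and `target` are collection objects, for example obtained
    with client.collections.get("OldName") (Python client v4 assumed).
    """
    copied = 0
    with target.batch.dynamic() as batch:
        # Export: stream all objects, optionally with their stored vectors.
        for obj in source.iterator(include_vector=reuse_vectors):
            # Import: keep the UUID, and the vector if it is being re-used.
            # Assumption: a single unnamed vector stored under "default".
            batch.add_object(
                properties=obj.properties,
                uuid=obj.uuid,
                vector=obj.vector["default"] if reuse_vectors else None,
            )
            copied += 1
    return copied
```

With `reuse_vectors=False`, no vector is sent and the new collection's configured model provider generates fresh embeddings during import. After the batch completes, it is worth inspecting `target.batch.failed_objects` for objects that could not be written.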

Added in Weaviate v1.25.0.

OctoAI offers a wide range of models for natural language processing and generation. Weaviate seamlessly integrates with OctoAI's APIs, allowing users to leverage OctoAI's models directly from the Weaviate database.

These integrations empower developers to build sophisticated AI-driven applications with ease.

Integrations with OctoAI

Embedding models for vector search

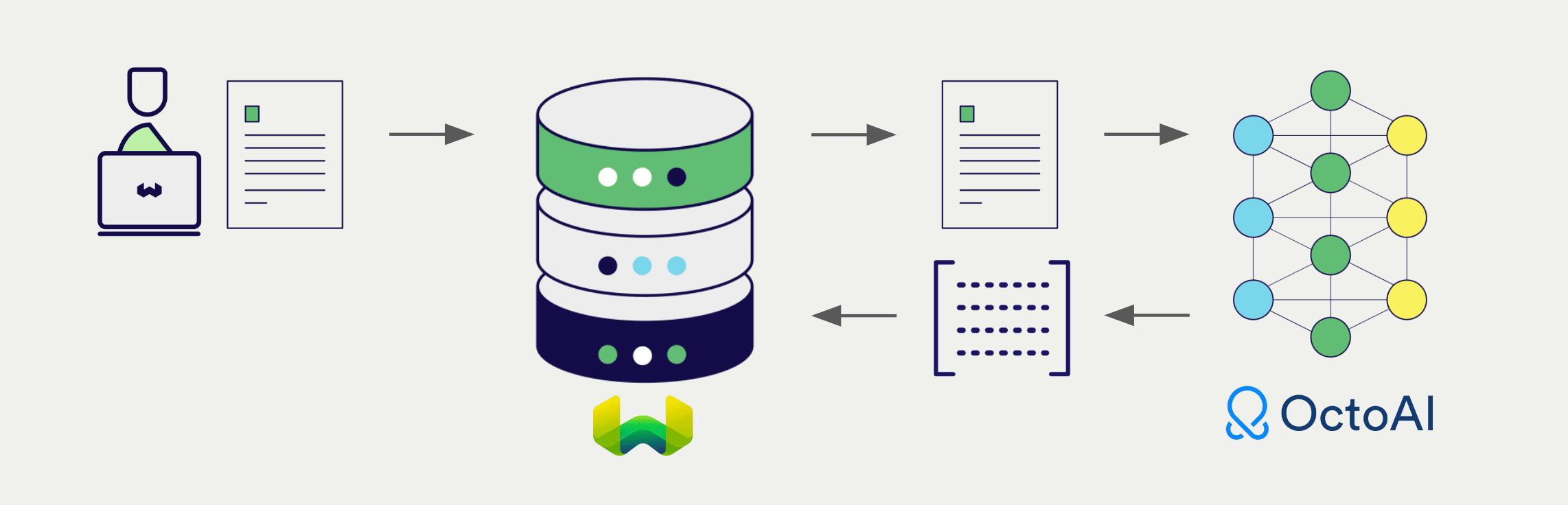

OctoAI's embedding models transform text data into vector embeddings, capturing meaning and context.

Weaviate integrates with OctoAI's embedding models to enable seamless vectorization of data. This integration allows users to perform semantic and hybrid search operations without the need for additional preprocessing or data transformation steps.

OctoAI embedding integration page

Generative AI models for RAG

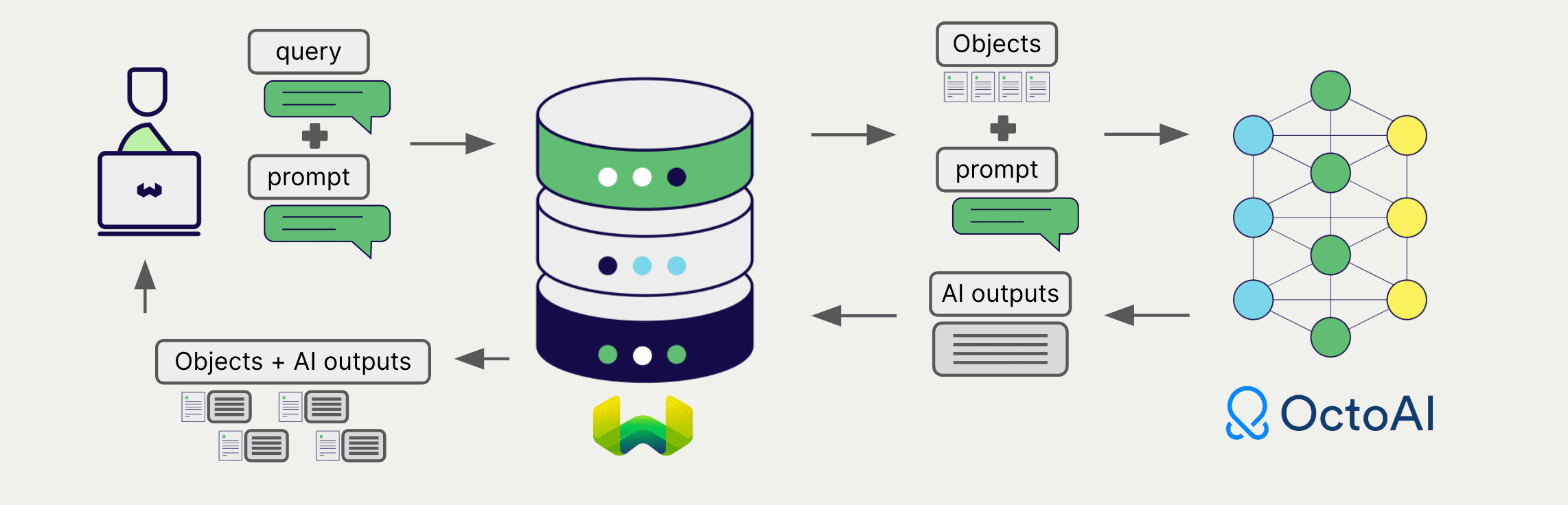

OctoAI's generative AI models can generate human-like text based on given prompts and contexts.

Weaviate's generative AI integration enables users to perform retrieval augmented generation (RAG) directly from the Weaviate database. This combines Weaviate's efficient storage and fast retrieval capabilities with OctoAI's generative AI models to generate personalized and context-aware responses.

OctoAI generative AI integration page

Summary

These integrations enable developers to leverage OctoAI's powerful models directly within Weaviate.

In turn, they simplify and speed up the process of building AI-driven applications, so that you can focus on creating innovative solutions.

Get started

You must provide a valid OctoAI API key to Weaviate for these integrations. Go to OctoAI to sign up and obtain an API key.

Then, go to the relevant integration page to learn how to configure Weaviate with the OctoAI models and start using them in your applications.

Questions and feedback

If you have any questions or feedback, let us know in the user forum.